Multimodal RAG for Comprehensive PDF Document Processing

The ability to efficiently extract and interpret information from PDF documents is crucial in the ever-expanding digital landscape. The Multimodal RAG (Retrieval-Augmented Generation) system integrates advanced Optical Character Recognition (OCR), Natural Language Processing (NLP), and machine learning technologies to offer a sophisticated platform for processing PDF documents. This blog post delves into the core functionalities and setup process of Multimodal RAG, with a special focus on its image extraction and conversion capabilities.

Introduction to Multimodal RAG

Multimodal RAG is designed to make both text and image data in PDF documents fully accessible and analyzable. It employs OCR to digitize text within images, NLP to understand and manipulate this text, and machine learning models to enhance data retrieval and analysis, ensuring a thorough understanding of document contents.

Core Features of Multimodal RAG

The platform's key features include:

- PDF and Image Processing: Automatic extraction of text and images from PDF files.

- Adaptive Image-to-Text Conversion: Options to use either OpenAI’s API or the LLaVA model for converting images to descriptive text.

- Advanced Text and Image Indexing: Utilizes the Chroma vector database for efficient data indexing and retrieval.

- Interactive Query Interface: A Streamlit-based interface facilitates easy exploration and querying of content.

Setup and Configuration

Requirements for the project

To get started with Multimodal RAG, the following needs to be installed if we are choosing to use OpenAI for Image to Text:

langchain==0.1.14

python-dotenv==1.0.1

unstructured[local-inference]==0.5.6

chromadb==0.4.24 # Vector storage

openai==1.16.2 # For embeddings

tiktoken==0.6.0 # For embeddings

streamlit==1.33.0

pypdf==4.1.0If you wish to use LLaVA, please see the additional requirements below (these include requirements for GPU utilization as well):

transformers==4.37.2

bitsandbytes==0.41.3

accelerate==0.25.0API Key

In .env file, add OPENAI_API_KEY=<YOURA_OAI_APIKEY>

Process Documents and Populate the Database

For text in PDFs, we are chunking them using:

def split_text(documents: list[Document]):

text_splitter = RecursiveCharacterTextSplitter(

chunk_size=300,

chunk_overlap=100,

length_function=len,

add_start_index=True,

)

chunks = text_splitter.split_documents(documents)

print(f"Split {len(documents)} documents into {len(chunks)} chunks.")

document = chunks[10]

print(document.page_content)

print(document.metadata)

return chunksExtracting Images from PDFs and Converting Them to Text

One of Multimodal RAG's standout features is its ability to handle images embedded within PDF documents. Here's a breakdown of how images are extracted from PDFs and converted into text.

a) Image Extraction from PDFs

The process begins with the extraction of images from each page of the PDF. The code snippet below demonstrates how this is achieved using the pypdf library:

from pypdf import PdfReader

def extract_images(pdf_path):

pdf_reader = PdfReader(pdf_path)

images = []

for page in pdf_reader.pages:

for img_name, img_details in page.images.items():

images.append(img_details.extract_image())

return images

b) Converting Extracted Images to Text

After extracting the images, they are converted into text using either OpenAI's API or the LLaVA model. Below is a code snippet that showcases how the OpenAI API can be utilized for this purpose:

import base64

import requests

def encode_image_to_base64(image_data):

return base64.b64encode(image_data).decode('utf-8')

def convert_image_to_text(image_data, api_key):

base64_image = encode_image_to_base64(image_data)

headers = {

"Content-Type": "application/json",

"Authorization": f"Bearer {api_key}"

}

payload = {

"model": "gpt-4-vision-preview",

"messages": [{

"role": "user",

"content": [{

"type": "text",

"text": "What’s in this image?"

}, {

"type": "image_url",

"image_url": {

"url": f"data:image/jpeg;base64,{base64_image}"

}

}]

}],

"max_tokens": 300

}

response = requests.post("https://api.openai.com/v1/chat/completions", headers=headers, json=payload)

return response.json()["choices"][0]["message"]["content"]

These generated texts (chunks) will be processed exactly like the way we handle the detected texts in pdf (classical RAGs).

Start the Streamlit application

If you are using "Dockerized" solution, don’t forget to add -p 8501:8501 as streamlit opens on 8501 by default.

streamlit run app.py- After sending your query, the app will search through the embeddings created from texts and images in the PDF documents.

- You will then receive a response along with the top 3 sources from which the information was extracted

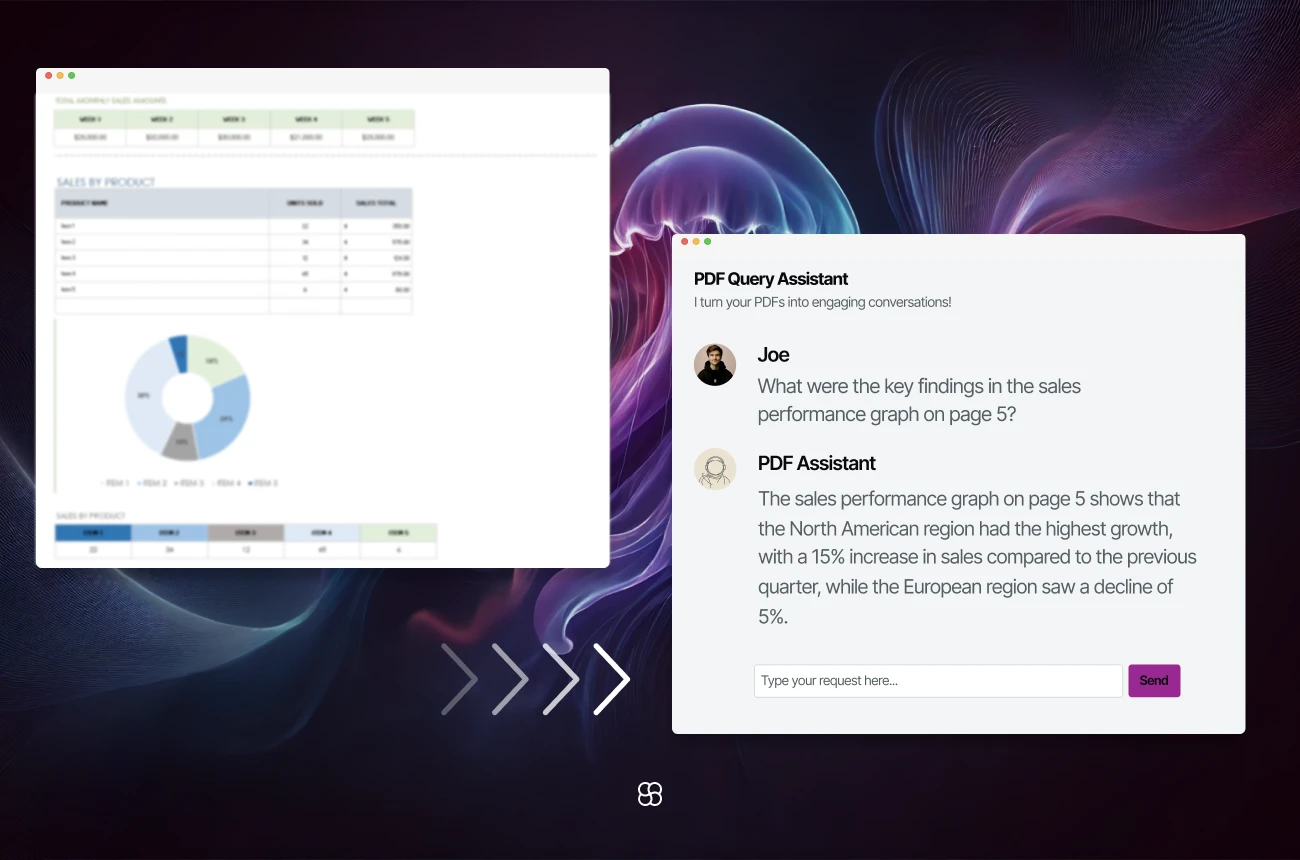

Demo Snippet and Video

As demonstrated in the video below, we addressed a question where the required information was contained within a graphic rather than text. The RAG system successfully provided the correct answer, complete with relevant sources!

Check out our Github page for more code snippets and a complete app implementation. Share comments and thoughts with us at hello@cohorte.co.

Conclusion

Multimodal RAG is a powerful tool for enhancing document processing workflows. Its advanced capabilities include extracting and converting images from PDF documents to text. By using this guide, users can leverage OCR, NLP, and machine learning to extract and analyze data more efficiently and effectively.