Mistral OCR: A Deep Dive into Next-Generation Document Understanding

Mistral OCR is an advanced Optical Character Recognition API introduced by Mistral AI as part of their AI ecosystem. It sets a new standard in document understanding, going far beyond traditional OCR tools. Unlike classical OCR that only extracts plain text, Mistral OCR comprehends the structure and context of each document element – including images, tables, equations, and complex layouts – with unprecedented accuracy. This means it not only reads text but also preserves document hierarchy (headers, paragraphs, lists, table structures) and formatting in the output.

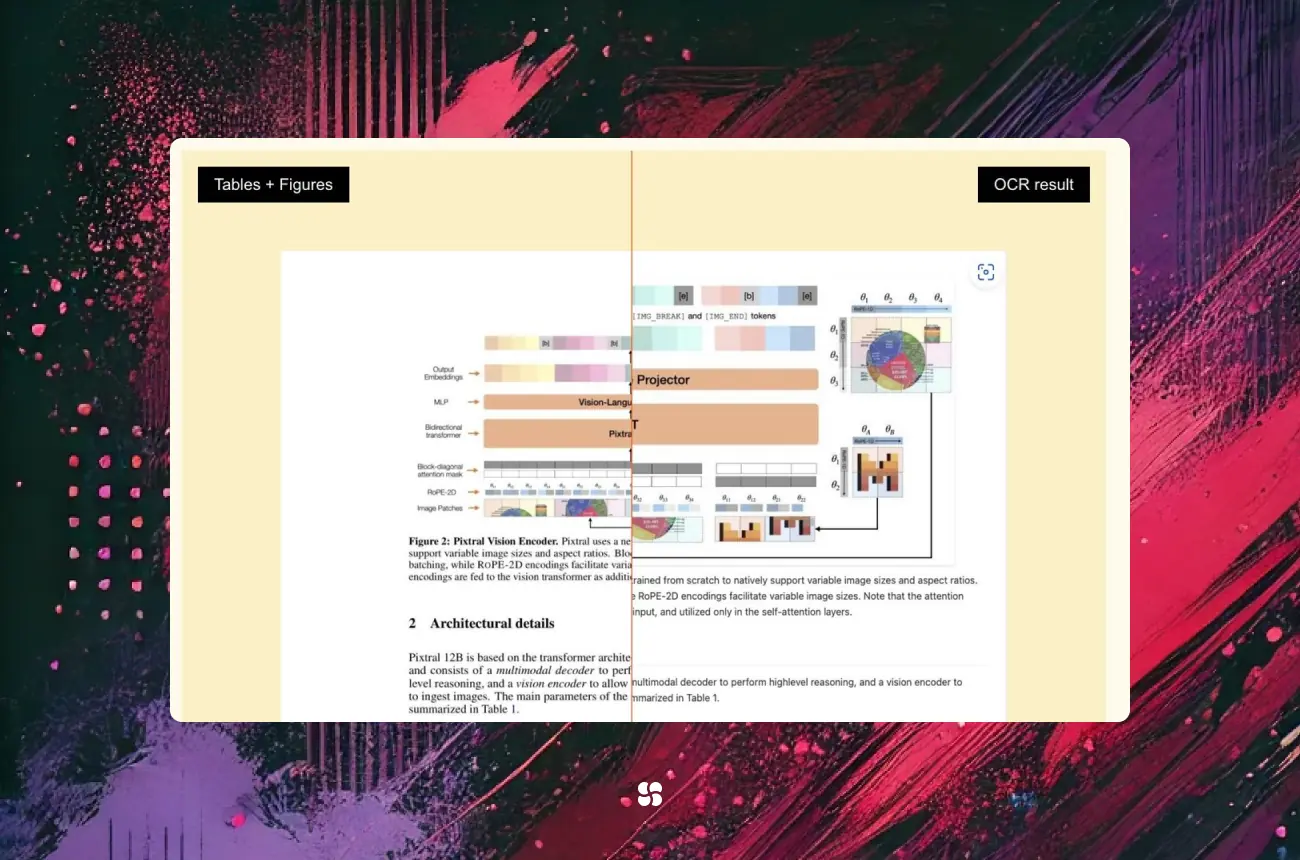

Key Capabilities: Mistral OCR can handle a wide range of document types (scanned PDFs, images, photos of documents, etc.) and maintains the original document structure in its results. It outputs recognized content in structured formats like Markdown or JSON, interleaving text with images where appropriate. This allows you to retain headings, tables, and figure references in the extracted data, making the output immediately useful for downstream applications. The model is also natively multilingual and can recognize thousands of languages and scripts, making it suitable for global use cases.

Performance: According to Mistral AI, their OCR engine achieves state-of-the-art accuracy. In internal benchmarks it reached ~94.9% accuracy across diverse document types, outperforming other solutions like Google Document AI (83.4%) and Azure OCR (89.5%). It’s designed for speed and scale – capable of processing up to 2,000 pages per minute on a single GPU node. In practical terms, enterprises can feed large volumes of documents without significant delays. The API is cloud-based but also offers options for on-premises deployment for organizations with strict data compliance needs. (Mistral has stated that self-hosted deployments are selectively available for handling highly sensitive data.)

Use Cases: The robust capabilities make Mistral OCR applicable in many industries. It excels at complex documents that mix text, images, and structured data. For example, financial services can use it to extract data from invoices, receipts, and bank statements; law firms can digitize contracts and court documents; healthcare providers can transcribe handwritten doctors’ notes or forms; and logistics companies can process shipping forms and bills of lading. In each case, the OCR output preserves structure, enabling easier analysis (tables remain tables, sections remain sections, etc.). The model even handles challenging inputs like mathematical formulas or diagrams in research papers, which opens up use cases like academic paper analysis and archival digitization. In short, Mistral OCR is positioned as a document understanding solution rather than a basic text extractor, integrating visual and textual AI to deliver rich, ready-to-use data.

Setup and Installation

Getting started with Mistral OCR is straightforward. The service is accessible via an API, and Mistral provides an official Python SDK (mistralai) to simplify integration. Beyond its own platform, the Mistral team has said it plans to offer the model via Hugging Face Inference Endpoints and other partners. For most developers, the steps to set up include installing the SDK, obtaining an API key, and writing a few lines of code to connect.

1. Install the Mistral SDK: Ensure you have Python 3.9+ in your environment. Install the required packages via pip:

```bash
pip install mistralai python-dotenv datauri
```

The mistralai package is Mistral’s official client library, while python-dotenv helps load environment variables (for managing your API key), and datauri will help handle image data URI encoding/decoding in some examples. It’s recommended to use a virtual environment (e.g. via venv or Conda) to avoid dependency conflicts. For instance, using Conda:

```bash
conda create -n mistral python=3.9 -y
conda activate mistral
pip install mistralai python-dotenv datauri
```

This creates and activates a clean environment and installs the SDK and helpers.

2. Obtain an API Key: Mistral OCR is a hosted service, so you need to sign up on Mistral’s developer platform (called La Plateforme) to get credentials. Log in to the Mistral console and navigate to the API Keys section. Click “Create new key”, give it an optional name and expiry, and copy the generated key. Store this key securely – for example, save it in a .env file:

```text
MISTRAL_API_KEY=<your_api_key_here>
```

Using a .env file keeps the key out of your code. Be sure to add this file to .gitignore if you use version control, so you don't accidentally publish your credentials.

3. Basic Configuration: In your Python script or notebook, load the API key and initialize the Mistral client:

```python
import os

from dotenv import load_dotenv
from mistralai import Mistral

load_dotenv()  # loads variables from the .env file into os.environ

api_key = os.environ["MISTRAL_API_KEY"]
client = Mistral(api_key=api_key)
```

This uses the SDK to create a Mistral client instance authenticated with your API key. Now you're ready to call the OCR API through this client.

4. (Optional) Hugging Face Integration: While the primary access is via Mistral’s API, the company has indicated the OCR model will be available through Hugging Face and other cloud partners as well. This means you may soon be able to use Hugging Face’s transformers or text-generation-inference endpoints to run Mistral OCR if you prefer. Additionally, the open-source Mistral-7B foundation model (which underpins their LLMs) is already on Hugging Face, and community projects have started building OCR solutions on top of it. However, for full OCR functionality with structured outputs, using Mistral’s API/SDK is the recommended approach currently.

Finally, note that the Mistral OCR API is a paid service (as of 2025, pricing is about $1 per 1,000 pages, i.e. $0.001 per page). Each request accepts documents of up to 50 MB and up to 1,000 pages. This pricing is quite competitive given the advanced capabilities.
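As a rough planning aid, the pricing and limits above can be turned into a quick pre-flight check. This is a minimal sketch that assumes the $1 per 1,000 pages figure quoted here, reads 50 MB as 50 * 1024 * 1024 bytes (an assumption), and uses hypothetical helper names (estimate_cost_usd, requests_needed, check_request_limits); confirm current prices and limits with Mistral before relying on it.

```python
import math

# Assumed figures taken from the text above; verify against Mistral's current pricing/docs.
PRICE_PER_1000_PAGES_USD = 1.0
MAX_PAGES_PER_REQUEST = 1000
MAX_BYTES_PER_REQUEST = 50 * 1024 * 1024  # assumption: "50 MB" read as mebibytes


def estimate_cost_usd(total_pages: int) -> float:
    """Estimate OCR cost for a batch of pages at the assumed per-page rate."""
    return total_pages * PRICE_PER_1000_PAGES_USD / 1000


def requests_needed(total_pages: int) -> int:
    """Minimum number of requests when each request is capped at MAX_PAGES_PER_REQUEST pages."""
    return math.ceil(total_pages / MAX_PAGES_PER_REQUEST)


def check_request_limits(num_pages: int, size_bytes: int) -> list[str]:
    """Return a list of problems; an empty list means the document fits in one request."""
    problems = []
    if num_pages > MAX_PAGES_PER_REQUEST:
        problems.append(f"{num_pages} pages exceeds the {MAX_PAGES_PER_REQUEST}-page limit")
    if size_bytes > MAX_BYTES_PER_REQUEST:
        problems.append(f"{size_bytes} bytes exceeds the {MAX_BYTES_PER_REQUEST}-byte limit")
    return problems


if __name__ == "__main__":
    print(estimate_cost_usd(250_000))          # 250.0
    print(requests_needed(2500))               # 3
    print(check_request_limits(9, 2_000_000))  # []
```

At the assumed rate, a 9-page paper like the one used in the next section would cost about $0.009 to process.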

First Steps and Usage Examples

Once setup is done, you can start performing OCR on documents with just a few lines of code. Mistral OCR can process both PDFs and images. We'll walk through examples of each, demonstrating how to extract text and structured content from these sources.

OCR on a PDF Document

The simplest way to use the API is to provide a URL to a PDF file. For example, let's use a publicly available PDF (an academic paper from arXiv) and run it through Mistral OCR:

```python
# Perform OCR on a PDF by URL
ocr_response = client.ocr.process(
    model="mistral-ocr-latest",
    document={
        "type": "document_url",
        "document_url": "https://arxiv.org/pdf/2310.06825",  # sample PDF (the Mistral 7B paper)
    },
)

print(ocr_response)
```

Here we call client.ocr.process with the model name ("mistral-ocr-latest" ensures we use the latest OCR model version) and a document parameter specifying a URL. Mistral's service fetches that PDF and processes it. The result ocr_response is a Python object (of type OCRResponse) containing the extracted content and metadata.

The ocr_response contains a list of pages, accessible via ocr_response.pages. Each page is an object with fields like markdown (the OCR text in Markdown format) and images (any images detected on that page). For example, we can check how many pages were detected:

```python
print(len(ocr_response.pages))
# Output: 9
```

If we print the content of the first page:

```python
print(ocr_response.pages[0].markdown)
```

We will see the text from page 1, including formatting markers. For instance, the output might start like:

```markdown
# Mistral 7B

Albert Q. Jiang, Alexandre Sablayrolles, ... William El Sayed

#### Abstract

We introduce Mistral 7B, a 7-billion-parameter language ...
```

This shows that the title was recognized as a level-1 heading (# Mistral 7B), the authors were captured, and the "Abstract" heading was preserved as a level-4 heading. The full page output (abridged above) also includes a Markdown reference to an embedded image (img-0.jpeg): Mistral OCR detected a figure on the page and left a placeholder image link in the text where it appeared. Overall, the document structure is preserved remarkably well.
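Since each page arrives as a separate object, it is often convenient to stitch the pages back into one Markdown document. The sketch below relies on the pages and markdown fields described above plus a per-page index field, which we assume is present on each page object; the function name combine_pages and the page-break comment marker are our own choices, and SimpleNamespace objects stand in for real OCR pages so the snippet runs offline.

```python
from types import SimpleNamespace


def combine_pages(pages, separator="\n\n<!-- page break -->\n\n"):
    """Join per-page Markdown into one string, ordered by page index.

    Assumes each page object exposes .index (int) and .markdown (str).
    """
    ordered = sorted(pages, key=lambda page: page.index)
    return separator.join(page.markdown.strip() for page in ordered)


if __name__ == "__main__":
    # Stand-ins for ocr_response.pages so the example runs without an API call
    fake_pages = [
        SimpleNamespace(index=1, markdown="## Introduction\nText on page 2."),
        SimpleNamespace(index=0, markdown="# Mistral 7B\nText on page 1."),
    ]
    print(combine_pages(fake_pages))
```

With a real response, full_markdown = combine_pages(ocr_response.pages) gives a single document you can save as a .md file or pass to later processing steps.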

By default, the actual image data isn't included in the response, only references. If you need to retrieve the images (e.g., figures or diagrams in the PDF), you can request that. Mistral’s API supports an include_image_base64 flag to embed images in the result. For example:

```python
ocr_response = client.ocr.process(
    model="mistral-ocr-latest",
    document={
        "type": "document_url",
        "document_url": "https://arxiv.org/pdf/2310.06825",
    },
    include_image_base64=True,  # embed extracted images in the response
)
```

With include_image_base64=True, each OCRImageObject in ocr_response.pages[i].images will have an image_base64 field containing the image data encoded in base64. You can then save these images to files. For instance:

```python
from datauri import parse


def save_image(ocr_image):
    # image_base64 holds a data URI; parse() decodes it to raw bytes
    data = parse(ocr_image.image_base64)
    # ocr_image.id is the file name referenced in the Markdown (e.g. "img-0.jpeg")
    with open(ocr_image.id, "wb") as f:
        f.write(data.data)


# Save all images from the OCR result
for page in ocr_response.pages:
    for img in page.images:
        save_image(img)
```

This will write out files like img-0.jpeg, img-1.jpeg, etc., which were embedded in the PDF. Because the Markdown output already references images by these same names, saving the files next to the Markdown recreates a rich representation of the document (text + images).
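If you would rather not add the datauri dependency, the standard library can decode the same data. This sketch assumes, as the example above implies, that image_base64 holds a data URI of the form data:<mime>;base64,<payload>, and it also tolerates a bare base64 string. The helper names decode_image_base64 and save_image_stdlib are hypothetical.

```python
import base64
import binascii
import os


def decode_image_base64(value: str) -> bytes:
    """Decode a base64 image string, with or without a 'data:...;base64,' prefix."""
    if value.startswith("data:"):
        header, _, payload = value.partition(",")
        if not header.endswith(";base64"):
            raise ValueError("only base64-encoded data URIs are supported")
    else:
        payload = value
    try:
        return base64.b64decode(payload, validate=True)
    except binascii.Error as exc:
        raise ValueError("invalid base64 payload") from exc


def save_image_stdlib(ocr_image, directory: str = ".") -> str:
    """Write one OCR image to disk, using its id (e.g. 'img-0.jpeg') as the file name."""
    path = os.path.join(directory, ocr_image.id)
    with open(path, "wb") as f:
        f.write(decode_image_base64(ocr_image.image_base64))
    return path
```

It drops into the same loop as before: for page in ocr_response.pages: for img in page.images: save_image_stdlib(img).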

Processing Local PDFs: What if your PDF is not online? The SDK provides a way to upload files. You can use client.files.upload() to send a local PDF to Mistral’s server and get back an internal URL to use in ocr.process. For example:

```python
# Upload a local PDF and get a temporary signed URL
filename = "report.pdf"

with open(filename, "rb") as f:
    uploaded = client.files.upload(
        file={"file_name": filename, "content": f},
        purpose="ocr",
    )

signed_url = client.files.get_signed_url(file_id=uploaded.id)

# Now use that URL in the OCR request
ocr_response = client.ocr.process(
    model="mistral-ocr-latest",
    document={
        "type": "document_url",
        "document_url": signed_url.url,
    },
    include_image_base64=True,
)
```

Under the hood, uploading the file first returns a file ID and then a signed URL that the OCR engine can access. This two-step process is a bit more involved, but it allows you to OCR local files without a publicly accessible URL. Once the ocr_response is obtained, usage is the same as before.

OCR on Images

Mistral OCR can also extract text from images (like photos or scans of documents, invoices, receipts, etc.). The API usage is similar, but instead of "document_url" we use "image_url" for the document type:

```python
ocr_response = client.ocr.process(
    model="mistral-ocr-latest",
    document={
        "type": "image_url",
        "image_url": "<URL to an image file>",
    },
)
```

If you have an image file locally (say receipt.jpeg), you can either upload it as shown for PDFs or encode it as a base64 data URI and pass it directly. A helper function to load an image file into a data URI string might look like:

```python
import base64
import mimetypes


def load_image(image_path):
    # Guess the MIME type (e.g. "image/jpeg") from the file extension
    mime_type, _ = mimetypes.guess_type(image_path)
    with open(image_path, "rb") as image_file:
        image_data = image_file.read()
    # Base64-encode the raw bytes and wrap them in a data URI
    base64_encoded = base64.b64encode(image_data).decode("utf-8")
    data_url = f"data:{mime_type};base64,{base64_encoded}"
    return data_url
```

This function reads the image in binary and produces a string like data:image/jpeg;base64,<lots of characters> which embeds the file content. Now we can OCR the local image by supplying this data URI:

```python
ocr_response = client.ocr.process(
    model="mistral-ocr-latest",
    document={
        "type": "image_url",
        "image_url": load_image("receipt.jpeg"),
    },
)
```

The result will again be an ocr_response object. For an example receipt image, Mistral OCR might output something like a series of lines (item names, prices, total, etc., if it’s a shopping receipt). In a tutorial example, the output wasn’t perfect but was reasonably close to the actual receipt content. Mistral OCR can handle a lot of variation in images (including photographs of documents taken at angles, or slightly noisy scans). However, image quality still matters: clear, high-resolution images will yield the best results. If the image contains handwriting, Mistral OCR will attempt to recognize it (handwritten text is a tough challenge, but the model has some capability to handle it).
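The choice between "document_url" and "image_url" can be wrapped in a small helper so the same call works for remote PDFs, remote images, and local images. This is a hypothetical convenience function (build_document is our name, not part of the SDK). It picks the type from the file extension, which is an assumption that will misfire on URLs without one, and it leaves local PDFs to the upload flow shown earlier.

```python
import base64
import mimetypes
import os
from urllib.parse import urlparse

# Assumption: file extensions treated as images; adjust to the formats Mistral documents.
IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg", ".webp"}


def _extension(source: str) -> str:
    # For URLs, inspect only the path so query strings don't affect the check
    path = urlparse(source).path if "://" in source else source
    return os.path.splitext(path)[1].lower()


def build_document(source: str) -> dict:
    """Build the document argument for client.ocr.process from a URL or a local image path."""
    is_image = _extension(source) in IMAGE_EXTENSIONS
    if source.startswith(("http://", "https://")):
        if is_image:
            return {"type": "image_url", "image_url": source}
        return {"type": "document_url", "document_url": source}
    if is_image:
        mime_type = mimetypes.guess_type(source)[0] or "application/octet-stream"
        with open(source, "rb") as f:
            encoded = base64.b64encode(f.read()).decode("utf-8")
        return {"type": "image_url", "image_url": f"data:{mime_type};base64,{encoded}"}
    # Local PDFs need the client.files.upload / get_signed_url flow instead
    raise ValueError(f"{source!r}: upload local non-image files with client.files first")
```

Usage: client.ocr.process(model="mistral-ocr-latest", document=build_document("receipt.jpeg")).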

Understanding the Output Structure

Whether you process PDFs or images, the output of Mistral OCR is designed to be developer-friendly. By returning Markdown text, the OCR preserves layout in a human-readable way. You can easily convert this Markdown to HTML or plain text as needed. For instance, headings start with #, list items with -, and tables use Markdown's pipe-delimited table syntax. If structured data is needed, you have a couple of options:

- Process the Markdown: You can parse the markdown to identify sections, tables, etc., or even use an LLM to convert it into a structured format (see next section on integration).

- Request JSON (roadmap feature): Mistral promotes structured output as one of Mistral OCR's strengths, and the team has highlighted direct JSON output on its roadmap. This means the OCR service may eventually return JSON with text content and layout metadata directly. For now, one common approach is to get Markdown and then transform it.
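For the "process the Markdown" route, a few lines of standard Python are enough to split OCR output into heading-delimited sections and pull out pipe tables. This is a simplified sketch with our own function names: it assumes #-style headings and pipe tables, as in the output shown earlier, and it does not handle headings inside code fences or tables with escaped pipes.

```python
import re

HEADING_RE = re.compile(r"^(#{1,6})\s+(.+?)\s*$")
SEPARATOR_CELL_RE = re.compile(r":?-+:?")


def split_sections(markdown: str) -> list[dict]:
    """Split Markdown into sections with a heading level, title and body text."""
    sections = [{"level": 0, "title": "", "body": []}]  # text before the first heading
    for line in markdown.splitlines():
        match = HEADING_RE.match(line)
        if match:
            sections.append({"level": len(match.group(1)), "title": match.group(2), "body": []})
        else:
            sections[-1]["body"].append(line)
    for section in sections:
        section["body"] = "\n".join(section["body"]).strip()
    # Drop the preamble if it turned out to be empty
    return [s for s in sections if s["title"] or s["body"]]


def parse_pipe_tables(markdown: str) -> list[list[list[str]]]:
    """Extract pipe tables as lists of rows of cell strings (separator rows skipped)."""
    tables, current = [], []
    for line in markdown.splitlines() + [""]:  # trailing "" flushes a table at the end
        stripped = line.strip()
        if stripped.startswith("|") and stripped.endswith("|") and len(stripped) > 1:
            cells = [cell.strip() for cell in stripped.strip("|").split("|")]
            if not all(SEPARATOR_CELL_RE.fullmatch(cell) for cell in cells):
                current.append(cells)
        elif current:
            tables.append(current)
            current = []
    return tables
```

Each section dict can then be indexed, searched, or passed to an LLM on its own, and each table arrives as a list of rows whose first row is the header.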

Each entry in a page's images list also carries bounding-box coordinates (top_left_x, top_left_y, bottom_right_x, bottom_right_y) for that image region. This can be useful if you need to post-process or analyze how content was laid out (for example, determining where on the page an image was located).
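To reason about where an image sits on a page independently of resolution, you can normalize its corner coordinates by the page size. This sketch assumes the coordinates are pixel values and that each page also reports its pixel size, which we read as page.dimensions.width and page.dimensions.height; both are assumptions to check against the API reference. SimpleNamespace objects stand in for real response objects.

```python
from types import SimpleNamespace


def normalized_bbox(image, page_width: float, page_height: float) -> tuple:
    """Return (x0, y0, x1, y1) as fractions of the page size, clamped to [0, 1]."""
    def clamp(value: float) -> float:
        return max(0.0, min(1.0, value))

    return (
        clamp(image.top_left_x / page_width),
        clamp(image.top_left_y / page_height),
        clamp(image.bottom_right_x / page_width),
        clamp(image.bottom_right_y / page_height),
    )


def describe_image_positions(page) -> list:
    """Summarize where each image sits vertically (top/middle/bottom third) and its area share."""
    # Assumed field names for the page size; verify against the OCR response schema
    width, height = page.dimensions.width, page.dimensions.height
    summaries = []
    for img in page.images:
        x0, y0, x1, y1 = normalized_bbox(img, width, height)
        center_y = (y0 + y1) / 2
        band = "top" if center_y < 1 / 3 else "middle" if center_y < 2 / 3 else "bottom"
        area_pct = 100 * (x1 - x0) * (y1 - y0)
        summaries.append(f"{img.id}: {band} of page, covering {area_pct:.0f}% of its area")
    return summaries


if __name__ == "__main__":
    # Stand-in objects shaped like an OCR page; real values come from ocr_response.pages
    img = SimpleNamespace(id="img-0.jpeg", top_left_x=100, top_left_y=50,
                          bottom_right_x=500, bottom_right_y=450)
    page = SimpleNamespace(dimensions=SimpleNamespace(width=1000, height=1000), images=[img])
    print(describe_image_positions(page))
```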

Integration with Other Frameworks and Pipelines

Mistral OCR truly shines when integrated into larger AI pipelines. Because it not only extracts text but also “understands” documents, it pairs well with language models, vector databases, and retrieval/QA frameworks. Here we explore how you can integrate Mistral OCR with tools like LangChain and Retrieval Augmented Generation (RAG) pipelines to build end-to-end applications.

Mistral OCR + LLMs (Doc-as-Prompt): One of the standout features is document-as-prompt functionality. This allows you to feed an entire document (or parts of it) into a prompt for a large language model. In practice, Mistral’s API supports mixing documents with text prompts in a single request. For example, using the Mistral chat completion API, you can do something like:

```python
messages = [
    {
        "role": "user",
        "content": [
            {"type": "text", "text": "Explain this document in plain language:"},
            {"type": "document_url", "document_url": "https://arxiv.org/pdf/2310.06825"},
        ],
    }
]

chat_response = client.chat.complete(model="mistral-small-latest", messages=messages)
print(chat_response.choices[0].message.content)
```

In this call, we provided a user prompt asking for an explanation, and we attached a document (via URL) as part of the message content. Behind the scenes, the Mistral service will run OCR on the document and feed the extracted content to the mistral-small-latest chat model. The result (chat_response) is the language model’s answer (in this case, a detailed explanation of the document). This seamless handoff between OCR and LLM means you can ask questions about a document, summarize it, or extract specific information without manually gluing together OCR output and prompt – the API does it for you. This is extremely powerful for building document Q&A or summarization features. For example, you could supply a legal contract and prompt, "List the termination conditions in this contract," and get an answer derived from the text.

LangChain Integration: If you are building with LangChain (a popular framework for chaining LLM operations), you can incorporate Mistral OCR in a couple of ways. The straightforward way is to use Mistral OCR as a preprocessing step: create a custom document loader that uses client.ocr.process to turn PDFs or images into text before they enter the LangChain pipeline. On the model side, the langchain_mistralai integration package offers a ChatMistralAI LLM wrapper and MistralAIEmbeddings for vector stores. In a RAG setup with LangChain, you might do something like:

- Use Mistral OCR to convert documents to text (and perhaps split into chunks if large).

- Use MistralAIEmbeddings to get embeddings for those chunks and store them in a vector database (FAISS, Pinecone, etc.).

- At query time, use ChatMistralAI as the LLM to answer questions, retrieving relevant chunks via LangChain’s retrieval chain.

This way, you're using Mistral’s ecosystem end-to-end: OCR for text extraction, Mistral’s embedding model for vector search, and a Mistral LLM for generation. The Mistral documentation even provides examples for RAG with LangChain and other libraries.
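The "split into chunks if large" step in the list above is easy to sketch in plain Python before handing text to MistralAIEmbeddings or any other embedding model. This is a minimal, framework-agnostic sketch (chunk_markdown is our own name): it packs blank-line-separated paragraphs into chunks up to a character budget with a one-paragraph overlap. The sizes are illustrative rather than tuned recommendations, and a LangChain text splitter would be a reasonable alternative.

```python
def chunk_markdown(markdown: str, max_chars: int = 2000, overlap_paragraphs: int = 1) -> list[str]:
    """Pack blank-line-separated paragraphs into chunks of at most ~max_chars characters.

    The last `overlap_paragraphs` paragraphs of a chunk are repeated at the start of the
    next one (when they fit) so context spanning a boundary is not lost. A single
    paragraph longer than max_chars becomes its own oversized chunk.
    """
    paragraphs = [p.strip() for p in markdown.split("\n\n") if p.strip()]
    chunks: list[str] = []
    current: list[str] = []
    for para in paragraphs:
        if current and len("\n\n".join(current + [para])) > max_chars:
            chunks.append("\n\n".join(current))
            tail = current[-overlap_paragraphs:] if overlap_paragraphs > 0 else []
            # Keep the overlap only if it still leaves room for the new paragraph
            current = tail if len("\n\n".join(tail + [para])) <= max_chars else []
        current.append(para)
    if current:
        chunks.append("\n\n".join(current))
    return chunks
```

Splitting on paragraph boundaries keeps Markdown tables and headings intact inside a chunk, which tends to matter more for OCR output than hitting an exact size.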

Document Q&A and RAG Pipelines: Retrieval-Augmented Generation (RAG) refers to systems where an LLM is augmented with an external knowledge source (like documents). Mistral OCR extends RAG to multimodal RAG – meaning your knowledge source can include scanned documents and images, not just text files. In a typical pipeline:

- You use Mistral OCR to ingest a batch of documents (say a thousand scanned pages of corporate reports).

- The output (text + structure) is indexed in a vector database with embeddings.

- A user query goes to the vector DB to find relevant portions.

- The retrieved text (which came from OCR originally) is fed into an LLM to generate the answer.
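The query-time half of this pipeline (retrieve relevant portions, then feed them to an LLM) can be illustrated without any external services. In this sketch a simple word-overlap score stands in for the embedding similarity a vector database would compute; it is a deliberate simplification to show the data flow, not a retrieval method to deploy, and the function names are our own. The resulting prompt string is what you would send to a chat model such as mistral-small-latest via client.chat.complete.

```python
import re
from collections import Counter


def _tokens(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, chunks: list[str], k: int = 3) -> list[str]:
    """Return up to k chunks sharing the most words with the query (stand-in for vector search)."""
    q = _tokens(query)
    scored = []
    for i, chunk in enumerate(chunks):
        overlap = sum((q & _tokens(chunk)).values())
        scored.append((overlap, -i, chunk))  # ties go to earlier chunks
    scored.sort(reverse=True)
    return [chunk for score, _, chunk in scored[:k] if score > 0]


def build_rag_prompt(query: str, chunks: list[str], k: int = 3) -> str:
    """Assemble a grounded prompt from the retrieved OCR chunks."""
    context = "\n\n---\n\n".join(retrieve(query, chunks, k))
    return (
        "Answer the question using only the context below, which was extracted "
        "from scanned documents by OCR.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )
```

Swapping retrieve for a real embedding search changes only how chunks are ranked; the prompt assembly stays the same.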

Mistral OCR ensures that even if the source was a PDF with charts and tables, the content isn’t lost to the LLM. It preserves the context that a raw text extractor might miss. Users have noted that integrating Mistral OCR into RAG unlocks new use cases, since previously inaccessible data in PDFs can now be part of your AI's knowledge base.

Other Frameworks: Beyond LangChain, you can use Mistral OCR with alternatives like LlamaIndex (formerly GPT Index) or Haystack. For instance, Haystack has a concept of file converters – one could create a Mistral OCR file converter to integrate into a Haystack pipeline for document search. Similarly, LlamaIndex can ingest documents via a custom loader; by using Mistral OCR in that loader, you allow LlamaIndex to work with image-based documents. Essentially, any place you need OCR in an AI workflow, Mistral OCR can drop in as a superior replacement for legacy OCR engines.

API Integration: If not using a specific framework, you can always call the Mistral OCR API directly from your backend or pipeline code. It’s a RESTful API (HTTPS endpoint) under the hood, so even outside Python you can integrate it (they provide a TypeScript client and cURL examples as well). Many developers wrap these calls in microservices. For example, you might have a small service that takes an image, calls Mistral OCR, and returns JSON – which your main application can call when needed. The outputs (JSON/Markdown) are easily passed between systems, so integrating with enterprise workflows (like uploading results to a database, or triggering other processes) is straightforward.
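Outside the Python SDK, the call is a plain HTTPS POST. This sketch builds (but does not send) such a request with only the standard library. The endpoint https://api.mistral.ai/v1/ocr and the JSON body shape mirror the SDK call shown earlier, but treat both as assumptions to verify against Mistral's API reference; build_ocr_request is a hypothetical helper name.

```python
import json
import urllib.request

OCR_ENDPOINT = "https://api.mistral.ai/v1/ocr"  # assumed endpoint; check the API reference


def build_ocr_request(api_key: str, document_url: str, model: str = "mistral-ocr-latest",
                      include_image_base64: bool = False) -> urllib.request.Request:
    """Build a POST request mirroring client.ocr.process(...) for a document URL."""
    body = {
        "model": model,
        "document": {"type": "document_url", "document_url": document_url},
        "include_image_base64": include_image_base64,
    }
    return urllib.request.Request(
        OCR_ENDPOINT,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# To actually send it (requires network access and a valid key):
# with urllib.request.urlopen(build_ocr_request(key, "https://arxiv.org/pdf/2310.06825")) as resp:
#     result = json.load(resp)
#     print(len(result["pages"]))
```

A microservice wrapper would call this, parse the JSON, and return only the fields your application needs.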

Advanced Features and Fine-Tuning

Beyond the basics, Mistral OCR offers advanced features that enable customization and more sophisticated uses:

Doc-as-Prompt and Combined Pipelines: The ability to treat an entire document as part of the prompt input is a game-changer. Under the hood, Mistral’s platform does the heavy lifting: it runs the OCR, presumably chunks the content if needed, and feeds it to the language model so that you can ask something like "Summarize the attached 50-page report" and get a result. This saves developers from stitching OCR output into prompts by hand, although it does not remove the model's context limit, so document size still matters. To use doc-as-prompt effectively, consider the following tips:

- Use it for tasks like Q&A, summarization, or extraction of specific fields from documents. For example, "Extract all the tables from the attached document and return as JSON." Mistral OCR will get the text and the LLM will output the structured data.

- Be mindful of document size relative to model context. Extremely large documents may require either using a larger context model or summarizing in sections. You might break a very long document into sections and process sequentially if needed.

- You can combine multiple documents in one prompt (the content array accepts multiple items). This could allow comparative questions (though that may be limited by the interface).
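Following the tip about multiple documents, a small helper can assemble the content array for a comparison prompt. This sketch reuses the message format from the chat example above; build_multi_doc_messages is a hypothetical name, and whether a given chat model accepts several document_url items in one request (and how many) is an assumption to test against your model.

```python
def build_multi_doc_messages(question: str, document_urls: list[str]) -> list[dict]:
    """Build a single user message that attaches several documents to one question."""
    if not document_urls:
        raise ValueError("at least one document URL is required")
    content = [{"type": "text", "text": question}]
    content += [{"type": "document_url", "document_url": url} for url in document_urls]
    return [{"role": "user", "content": content}]


# Usage with the client from earlier (network call, so shown commented out):
# messages = build_multi_doc_messages(
#     "Compare the evaluation setups described in these two papers.",
#     ["https://arxiv.org/pdf/2310.06825", "<second PDF URL>"],
# )
# response = client.chat.complete(model="mistral-small-latest", messages=messages)
# print(response.choices[0].message.content)
```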

Structured Output and Parsing: Mistral’s ecosystem has the concept of structured outputs. For instance, the Mistral chat API can directly output to a Pydantic model (response_format=YourSchema) using a feature similar to function calling. In the OCR context, a clever technique is to first run OCR to get Markdown, and then use a second call to parse that into JSON. An example of this pattern, inspired by an Analytics Vidhya demo, is:

```python
from pydantic import BaseModel


class StructuredDoc(BaseModel):
    # Define the fields you want, e.g.:
    title: str
    tables: list[dict]
    # ... etc.


# After getting OCR markdown:
doc_text = ocr_response.pages[0].markdown  # (for simplicity, one page example)

# Now ask the LLM to parse it
parse_response = client.chat.parse(
    model="mistral-large-latest",  # use a larger model for parsing if needed
    messages=[
        {"role": "user", "content": [
            {"type": "text", "text": f"Here is a document:\n<BEGIN_DOCUMENT>\n{doc_text}\n<END_DOCUMENT>\nExtract the title and tables as a JSON."}
        ]}
    ],
    response_format=StructuredDoc,
)

structured_result = parse_response.choices[0].message.parsed
```

In this hypothetical example, the chat.parse method is used with a Pydantic schema StructuredDoc which tells the model the JSON structure we expect. The prompt wraps the document text in plain delimiter markers (<BEGIN_DOCUMENT> and <END_DOCUMENT>) to clearly separate it from the instructions. The result structured_result would be directly a StructuredDoc object (or it could be converted to dict/JSON). The Mistral API’s structured-output support means the model is constrained to output JSON that matches the schema. This is extremely useful for extracting structured data (say key fields from an invoice or form) automatically.

Fine-Tuning: Fine-tuning Mistral OCR itself (the vision-text model) is not something end-users can easily do at the moment, since it’s provided as a service. However, the Mistral platform allows fine-tuning of their base language models for custom needs, which could indirectly improve OCR-based pipelines (for example, fine-tuning a chat model to better understand domain-specific documents after OCR). The OCR model in Mistral’s API is updated by the Mistral AI team (the model name “mistral-ocr-latest” will automatically point to the newest version). If you have specialized documents (e.g., blueprints, or documents with unusual fonts) and you need better performance, you could reach out to Mistral about custom solutions, or self-host the model and fine-tune it if and when they allow this. Another option is to fine-tune an open vision-language model on your own data; note that Mistral-7B, while open source, is a text-only language model rather than an OCR model. Either route requires considerable expertise and isn't trivial.

It's worth noting that some community efforts exist to fine-tune Mistral models for OCR tasks. For example, one project uses Mistral-7B to convert OCR outputs into structured JSON for receipts – essentially splitting the problem: use a traditional OCR for character detection, then use Mistral LLM to structure it. While not fine-tuning the OCR model itself, it demonstrates the flexibility of the ecosystem.

Customization Options: Out of the box, the OCR API has a few parameters:

- include_image_base64 (as we saw) to include images.

- You can also limit processing to specific pages of a PDF if you don't need the entire document. The API reference describes a pages parameter on the OCR request itself (zero-based page numbers) rather than a field inside the document object; check the current docs for the exact forms it accepts.

- If you only want text and no Markdown formatting, one approach is to strip Markdown from the output in post-processing. Currently, the API doesn't have a toggle for "plain text" vs "markdown"; it defaults to Markdown for fidelity. (The OCR response likely also tags detected languages in metadata.)
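For the plain-text option, post-processing can be as simple as a handful of regular expressions. This is a rough sketch with our own function name: it covers the constructs seen in OCR output so far (headings, bold and asterisk italics, images, links, list markers, pipe tables), deliberately ignores underscore emphasis so identifiers like snake_case survive, and is not a full Markdown parser; a dedicated library would be more robust for edge cases.

```python
import re


def markdown_to_plain_text(markdown: str) -> str:
    """Strip common Markdown syntax from OCR output, keeping the readable text."""
    text = markdown
    text = re.sub(r"!\[[^\]]*\]\([^)]*\)", "", text)                    # drop image references
    text = re.sub(r"\[([^\]]*)\]\([^)]*\)", r"\1", text)                 # keep link text, drop URL
    text = re.sub(r"^[ \t]{0,3}#{1,6}[ \t]+", "", text, flags=re.M)      # heading markers
    text = re.sub(r"^[ \t]*[-*+][ \t]+", "", text, flags=re.M)           # bullet markers
    text = re.sub(r"^[ \t]*\|?[ \t:|-]*-{3,}[ \t:|-]*$", "", text, flags=re.M)  # table separator rows
    text = re.sub(                                                       # table rows -> spaced cells
        r"^[ \t]*\|(.*)\|[ \t]*$",
        lambda m: "  ".join(cell.strip() for cell in m.group(1).split("|")),
        text,
        flags=re.M,
    )
    text = re.sub(r"\*\*(.+?)\*\*", r"\1", text)                         # bold
    text = re.sub(r"\*(\S(?:.*?\S)?)\*", r"\1", text)                    # italics (asterisks only)
    text = re.sub(r"\n{3,}", "\n\n", text)                               # collapse extra blank lines
    return text.strip()
```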

Self-Hosting: For organizations that require data to never leave their infrastructure (banks, government, etc.), Mistral AI has announced an on-premise option for the OCR model. This likely involves running the model on your own servers/GPUs. The self-deployment might use frameworks like vLLM or Hugging Face’s Text Generation Inference (TGI) which are mentioned as supported backends. Self-hosting would allow fine-tuning or custom model updates, but it requires significant machine resources (the model is large and multimodal). The benefit is complete control and privacy.

In summary, Mistral OCR provides not just an API endpoint, but a platform capability that can be woven into complex AI workflows. From building an AI agent that can read and answer questions about scanned documents, to automating data extraction with structure, the advanced features enable a high degree of automation. As you grow with the tool, you might use these features to streamline tasks that once required multiple separate OCR and post-processing steps.

Real-World Examples and Use Cases

Mistral OCR’s capabilities lend themselves to numerous real-world applications. Let’s look at how different industries and domains can leverage this technology:

- Financial Services (Invoices and Receipts): Finance departments and fintech apps deal with mountains of invoices, receipts, and statements. Mistral OCR can accurately extract line items, tables of transactions, dates, and totals from these documents. For example, an expense management system could use it to turn photographed receipts into structured records (merchant, amount, date, etc.), feeding directly into accounting software. Because of the high accuracy across varied document layouts, errors (which could be costly in finance) are minimized. The speed of processing also means even large batches of invoices (say end-of-month processing) can be automated quickly.

- Legal Industry (Contracts and Court Documents): Legal documents are often long and dense, with critical information scattered throughout. Using Mistral OCR, law firms and legal tech companies can digitize contracts, leases, case files, and more, while preserving headings, clauses, and formatting. This structured text can then be searched or analyzed. For instance, a law firm could build a database of past case judgments by scanning PDFs and then query them with an LLM for relevant precedents. The context-awareness of Mistral OCR ensures that section headings like "Section 5: Termination" are captured as such, making it easier to automatically identify and extract specific clauses. Given that legal documents may contain tables (e.g., schedule of payments) or signatures, the ability to handle those elements is a big plus.

- Healthcare (Medical Records and Forms): Healthcare generates a mix of printed and handwritten documents – patient intake forms, doctor’s notes, lab reports, prescriptions. Mistral OCR’s multimodal prowess (including some handwriting recognition) is valuable here. It can transform these into text that hospital information systems can use. For example, a doctor's scrawled notes can be partly transcribed, and then a medical language model could summarize the key observations. Privacy is crucial in healthcare, so an on-prem deployment of Mistral OCR might be used in hospitals to ensure data never leaves their secure network. Moreover, the support for many languages is useful in multilingual patient communities (e.g., recognizing both English and Spanish text in the same medical form).

- Logistics and Transportation: This industry runs on forms and documents – bills of lading, packing lists, customs declarations, shipping labels – often printed or faxed. Mistral OCR can digitize these documents so that logistics management systems can track shipments without manual data entry. For instance, extracting addresses, product codes, and quantities from a packing list can update inventory automatically. Complex layout is a factor here (tables of items, multi-column manifests) which Mistral OCR handles gracefully, preserving the rows and columns of tables in Markdown format. Faster OCR means quicker turnaround in supply chain decisions (e.g., customs paperwork can be processed in bulk within minutes instead of days).

- Research and Education: Researchers often deal with scanned PDFs of papers, old books, or notes. Mistral OCR can be used to create a personal digital library where every document is searchable. Because it retains equations, references, and figure captions, it’s extremely useful for scientific papers. A researcher could OCR a stack of journal articles and then query them (with an LLM) for specific findings or even ask for a summary of each paper’s results. In education, teachers or students could scan textbook pages or handwritten class notes and use Mistral OCR to digitize them. One example use-case is building a study guide: feed textbook chapters and lecture notes in, then ask an LLM (via Mistral’s chat) to generate quiz questions or explain concepts – essentially creating a custom tutor out of your own materials.

- Document Digitization & Archives: Museums, libraries, and government archives often have vast collections of paper documents (historical records, letters, etc.). Mistral OCR can assist in large-scale digitization projects, turning these into text while preserving formatting. Its multilingual support is crucial here, as archives often contain documents in various languages or old fonts. By converting these to text, archives can be made searchable online, unlocking information that was previously difficult to access. For example, a national archive could OCR decades of newspaper scans and then allow researchers to search for articles by keyword or date. Because Mistral OCR keeps the structure, even things like article titles and columns in a newspaper page would be delineated.

These examples illustrate the versatility of Mistral OCR. Whether it’s a niche application like reading mathematical content in research papers or a broad one like processing invoices, the tool adapts to the content. In all cases, the common theme is automation of understanding – taking documents that used to require human reading and making their content immediately usable by software. This can save countless hours of manual data entry and enable new capabilities (like querying a pile of documents with an AI assistant).

Community Feedback and Ecosystem

Since its launch, Mistral OCR has generated significant buzz in the AI developer community. Early adopters have shared impressive demos as well as constructive critiques, forming a growing ecosystem around the tool.

General Sentiment: The overall sentiment is that Mistral OCR is a breakthrough in document AI. Developers have praised its accuracy on complex layouts and the convenience of the Markdown output which keeps the document structure intact. Many note that it "just works" on content where previous OCR solutions struggled (like mixed text and image pages or odd formatting). The fact that it can handle many languages out-of-the-box is another point of excitement, as it reduces the need for multiple OCR tools.

Mistral AI’s decision to open-source their base models (like Mistral 7B LLM) has also endeared them to the community. While the OCR model itself is accessed via API, the philosophy of openness and the strong technical performance have drawn comparisons to big players (some wonder if this could rival proprietary services from Google, Microsoft, etc., given the benchmarks). The pricing being relatively affordable was also received positively, as it lowers the barrier for startups to incorporate it.

User Reviews and Discussions: On platforms like Hacker News and Reddit, developers shared experiences. In one HN discussion, a user ran benchmarks and found Mistral OCR to be “an impressive model” albeit noting that OCR is a hard problem and cautioning about the risk of hallucinations or missing text with LLM-based approaches. This highlights an important point: because Mistral uses an LLM under the hood, if the model is uncertain it might omit or slightly alter text, unlike rule-based OCR which might show gibberish but not "invent" text. In critical applications, this means outputs should be validated – a sentiment echoed by some early users. Overall, however, no one disputed the step-change in capability Mistral OCR provided; the discussion was more about edge cases and how to best evaluate OCR quality.

Another community observation is the comparison to traditional OCR followed by an LLM. Some have suggested pipelines where a conventional OCR (like Tesseract) gets raw text and then an LLM cleans or structures it. Mistral OCR essentially merges those steps, which most find convenient, though a few purists debate evaluation methodologies (one user pointed out challenges in using an LLM to judge OCR quality, preferring direct comparison to ground truth text). Mistral AI team members responded in discussions, sharing that they benchmark using structured outputs and ground truth to measure accuracy in a robust way.
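To make "direct comparison to ground truth text" concrete, one common metric is character error rate (CER): the Levenshtein edit distance between the OCR output and a reference transcription, divided by the reference length. The sketch below is plain Python with illustrative function names (not part of any Mistral SDK), and it is not necessarily how Mistral or the commenters measured accuracy. Omitted and invented characters both raise the score, which is why it catches the failure modes discussed above.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or substitutions turning a into b."""
    # Keep only two rows of the dynamic-programming table to save memory.
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            current.append(min(
                previous[j] + 1,         # delete ca
                current[j - 1] + 1,      # insert cb
                previous[j - 1] + cost,  # substitute (or keep a match)
            ))
        previous = current
    return previous[-1]


def character_error_rate(ocr_text: str, ground_truth: str) -> float:
    """Edit distance normalized by ground-truth length; 0.0 means a perfect match."""
    if not ground_truth:
        # Convention chosen for this sketch: empty reference is perfect only if output is empty too.
        return 0.0 if not ocr_text else 1.0
    return levenshtein(ocr_text, ground_truth) / len(ground_truth)


truth = "Invoice total: 1,250.00 EUR"
ocr = "Invoice total: 1,250.00"  # the model dropped the currency code
print(f"CER = {character_error_rate(ocr, truth):.3f}")  # CER = 0.148
```

Averaging CER over a held-out set of pages with hand-checked transcriptions gives a number you can track across model versions.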

Ecosystem and Community Projects: Since release, several tutorials, blog posts, and even open-source projects have emerged:

- Tutorials & Guides: We’ve referenced a detailed DataCamp tutorial and an Analytics Vidhya blog, which walk through practical usage of Mistral OCR in applications. These resources indicate a healthy interest and help new users get up to speed quickly. Medium articles (such as "Mistral OCR: The Must-Have Tool for Efficient Document Processing") highlight the key features and share simple code snippets. This wealth of write-ups shows that the developer community is actively experimenting and teaching others.

- GitHub and Hugging Face: Community-contributed code is appearing on GitHub. For example, there are demo repositories that integrate Mistral OCR into web apps (some on Hugging Face Spaces) showcasing a web UI to upload a PDF and get the OCR result. Issues and discussions on the `mistralai` GitHub repository (the SDK) have been reported and resolved, indicating the Mistral team is responsive (e.g., compatibility issues between `mistralai` and other packages were discussed). On Hugging Face Hub, while the actual OCR model weights are not published, there are related models (like fine-tuned Mistral LLMs for OCR post-processing tasks, as mentioned earlier) which indicate experimentation.

- Community Sentiment: On Mistral’s Discord and forums, users are sharing tips and asking for new features (such as direct table extraction or better handling of certain image types). The sentiment is largely enthusiastic; many see Mistral OCR as filling a crucial gap for enabling multimodal LLM applications. It’s often described as part of a larger ecosystem – meaning developers look at it alongside Mistral’s text generation models, seeing the potential for an all-Mistral solution (OCR + LLMs + embeddings) that is open and high-performance.

It's also worth noting that Mistral OCR arrived amid rapid progress in vision-capable models (e.g., OpenAI's GPT-4 with vision), so there's a lot of interest in comparing approaches. Some on Hacker News compared Mistral OCR to those models, and while direct comparison is hard without equal access, the fact that Mistral's solution is available via API at reasonable cost (with self-hosting offered selectively for sensitive deployments) is a huge differentiator for many: there is no waiting list or tiered access, and you can get started today with an API key.

In summary, community feedback has validated Mistral OCR as a top-tier tool for document processing. The ecosystem around it – including integrations with popular libraries (LangChain, etc.), and the support from Mistral AI (documentation, SDKs, open discourse) – is growing rapidly. Users are excited, but also realistic that OCR is not perfect; they emphasize using it thoughtfully and keeping an eye on any errors in critical scenarios. Given the active involvement of both users and the Mistral team, we can expect the tool to improve steadily and address many of the early feedback points.

Best Practices and Tips for Using Mistral OCR

To get the most out of Mistral OCR in your projects, consider the following best practices and tips, distilled from documentation and early users’ experiences:

- Optimize Document Quality: While Mistral OCR is very capable, input quality still affects output. For scanned documents:

  - Ensure they are not skewed or upside-down (if they are, consider pre-processing with an image library to deskew or rotate).

  - If an image is very dark or noisy (e.g., a scan of old paper), simple preprocessing like converting to grayscale or increasing contrast can help. (Traditional techniques like OpenCV’s thresholding can reduce background noise and improve text clarity before sending to OCR.)

  - Higher-resolution images yield better accuracy: if you have a choice, provide scans of at least 300 DPI or clear photographs.
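To act on the 300 DPI guideline before uploading, you can estimate a scan's effective resolution from its pixel dimensions and the physical page size. This is a minimal sketch: it assumes you know the page size in inches (US Letter, 8.5 × 11, is the default) and have read the pixel dimensions with your imaging library; the helper names and the threshold are illustrative, not API requirements.

```python
def effective_dpi(width_px: int, height_px: int,
                  page_width_in: float = 8.5, page_height_in: float = 11.0) -> float:
    """Return the lower of the horizontal and vertical resolutions, in dots per inch."""
    # A scan is only as sharp as its weakest axis, so report the minimum.
    return min(width_px / page_width_in, height_px / page_height_in)


def needs_rescan(width_px: int, height_px: int, min_dpi: float = 300.0, **page_size: float) -> bool:
    """Flag images whose effective resolution falls below the recommended minimum."""
    return effective_dpi(width_px, height_px, **page_size) < min_dpi


# A US Letter page scanned at 300 DPI is 2550 x 3300 pixels.
print(effective_dpi(2550, 3300))  # 300.0
print(needs_rescan(1275, 1650))   # True (only 150 DPI)
# An A4 page (8.27 x 11.69 in) captured at 1240 x 1754 pixels is roughly 150 DPI.
print(needs_rescan(1240, 1754, page_width_in=8.27, page_height_in=11.69))  # True
```

For photographs of documents the page may not fill the frame, so treat the result as an upper bound on the real text resolution.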

- Leverage Markdown Structure: The Markdown output is rich. Use it! Instead of just stripping to plain text, you can:

  - Render the Markdown to HTML for a quick viewer of the OCR result (useful for validating output visually).

  - Parse the Markdown to identify elements (e.g., use a Markdown parser library to iterate over headings, tables, etc.). This can be an intermediate step to structured data. For example, if you need all bullet points from a document, you could parse the Markdown AST to find list items.

  - Be aware that images in the Markdown are referenced by filenames through standard image links. If you asked for base64 images, make sure to save them under those filenames so that the Markdown links resolve.
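A lightweight way to pull structure out of the OCR Markdown is shown below. It is a line-by-line regular-expression sketch, not a full parser (for tables, nested lists, and edge cases, a CommonMark parser such as markdown-it-py or mistune is the sturdier choice). The image-saving helper assumes each image object in the OCR response carries an `id` matching the Markdown link target and an `image_base64` string, possibly prefixed as a data URI; verify both against the response you actually receive.

```python
import base64
import re
from pathlib import Path
from typing import Dict

HEADING_RE = re.compile(r"^(#{1,6})\s+(.*)$")
LIST_ITEM_RE = re.compile(r"^\s*(?:[-*+]|\d+[.)])\s+(.*)$")
IMAGE_RE = re.compile(r"!\[[^\]]*\]\(([^)\s]+)\)")


def outline(markdown: str) -> Dict[str, list]:
    """Collect headings, list items, and image link targets from OCR Markdown, line by line."""
    headings, items, images = [], [], []
    for line in markdown.splitlines():
        heading = HEADING_RE.match(line)
        item = LIST_ITEM_RE.match(line)
        if heading:
            # Store (level, text), e.g. "## Findings" -> (2, "Findings").
            headings.append((len(heading.group(1)), heading.group(2).strip()))
        elif item:
            items.append(item.group(1).strip())
        # Image links can appear anywhere on a line, including inside list items.
        images.extend(IMAGE_RE.findall(line))
    return {"headings": headings, "list_items": items, "images": images}


def save_base64_image(image_id: str, image_base64: str, out_dir: Path) -> Path:
    """Decode one base64 image and save it as out_dir/<image_id> so Markdown links resolve."""
    # Assumption: the payload may be a data URI ("data:image/jpeg;base64,...");
    # drop that prefix if present before decoding.
    if image_base64.startswith("data:"):
        image_base64 = image_base64.split(",", 1)[1]
    out_dir.mkdir(parents=True, exist_ok=True)
    # Keep only the final path component so an unexpected id cannot write outside out_dir.
    path = out_dir / Path(image_id).name
    path.write_bytes(base64.b64decode(image_base64))
    return path
```

With a response in hand, you would loop over `response.pages` and, for each image in `page.images`, call `save_base64_image(img.id, img.image_base64, Path("ocr_images"))`, then write the page Markdown into the same folder so its image links resolve. The attribute names here are the same assumption as above.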

- Batch Processing for Scale: If you have a large number of documents, consider batching requests. The Mistral API supports batch inference, which can be more efficient and cost-effective. Rather than calling the API 1,000 times for 1,000 pages, send multi-page PDFs in one request (the API already processes every page of a PDF in a single call), and check the latest API docs for batch endpoints, multi-document requests, and concurrency limits. At the very least, you can run multiple `client.ocr.process` calls in parallel (within your rate limit) to speed up throughput. Mistral’s pricing note indicates that batch processing effectively halves the cost per page, so using it could save money.
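The parallel option can be sketched with a thread pool. `ocr_many` takes any function that OCRs one document, which keeps the concurrency logic testable without network access. `make_mistral_ocr` is a hypothetical adapter that assumes the 1.x `mistralai` SDK's `Mistral` client and the `client.ocr.process(model=..., document=..., include_image_base64=...)` call shape, and that one client can be shared across threads; check both against the SDK version you install, and size `max_workers` to your rate limit.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable, TypeVar

T = TypeVar("T")


def ocr_many(urls: Iterable[str], ocr_one: Callable[[str], T], max_workers: int = 4) -> Dict[str, T]:
    """Run ocr_one on every URL using a thread pool; results are keyed by URL."""
    url_list = list(urls)
    # Threads fit here because each call mostly waits on the network, not the CPU.
    # Keep max_workers at or below what your rate limit allows.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in input order and re-raises the first failure it reaches.
        return dict(zip(url_list, pool.map(ocr_one, url_list)))


def make_mistral_ocr(api_key: str) -> Callable[[str], object]:
    """Build an ocr_one function backed by the mistralai SDK (assumed 1.x API, see lead-in)."""
    from mistralai import Mistral  # imported lazily so ocr_many works without the SDK

    client = Mistral(api_key=api_key)  # assumption: one client is safe to share across threads

    def ocr_one(url: str) -> object:
        return client.ocr.process(
            model="mistral-ocr-latest",
            document={"type": "document_url", "document_url": url},
            include_image_base64=False,  # text only keeps responses small
        )

    return ocr_one
```

For example, `ocr_many(pdf_urls, make_mistral_ocr(os.environ["MISTRAL_API_KEY"]))`, with `pdf_urls` being your own (hypothetical) list of document URLs, would return a dict mapping each URL to its OCR response. Batch endpoints, where available, remain the cheaper route for very large jobs.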

- Handling Errors and Timeouts: As with any external API, be prepared to handle exceptions. If a network issue occurs or if the service returns an error (e.g., due to an unsupported file format or an internal error):

  - Implement retries with backoff for transient errors.

  - Use try/except around your `client.ocr.process` calls. The exception class depends on the SDK version (some demo code imports `MistralException`), so check the SDK documentation for the version you have installed.

  - For very large documents, if processing takes too long, you might get a timeout. In such cases, consider splitting the document (e.g., OCR each section or each block of 100 pages separately). This can also help if you encounter memory limits on extremely big outputs.
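Retries with backoff and splitting large jobs can be sketched generically. `with_retries` wraps any zero-argument call. By default it retries only Python's built-in `TimeoutError` and `ConnectionError`, which is an assumption: pass the exception classes your SDK version actually raises for transient failures (timeouts, HTTP 429/5xx) via `retry_on`. `page_ranges` only computes chunk boundaries; producing the sub-documents (for example with a PDF library such as pypdf) is left out.

```python
import random
import time
from typing import Callable, Iterator, Tuple, Type, TypeVar

T = TypeVar("T")


def with_retries(
    call: Callable[[], T],
    retry_on: Tuple[Type[BaseException], ...] = (TimeoutError, ConnectionError),
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run call(), retrying exceptions listed in retry_on with capped exponential backoff."""
    attempt = 1
    while True:
        try:
            return call()
        except retry_on:
            if attempt >= max_attempts:
                raise  # give up: re-raise the last transient error
            # The backoff ceiling doubles per attempt (1s, 2s, 4s, ...) up to max_delay;
            # "full jitter" sleeps a random fraction of it so clients don't retry in lockstep.
            ceiling = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, ceiling))
            attempt += 1


def page_ranges(total_pages: int, chunk_size: int = 100) -> Iterator[Tuple[int, int]]:
    """Yield (start, end) page ranges with end exclusive, for splitting a large document."""
    for start in range(0, total_pages, chunk_size):
        yield start, min(start + chunk_size, total_pages)
```

In use, that might look like `with_retries(lambda: client.ocr.process(model="mistral-ocr-latest", document=doc))`, where `client` and `doc` are whatever you already built for the call. Errors not listed in `retry_on`, such as an unsupported file format, propagate immediately instead of being retried.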

- Use Language Detection (or Specification): Mistral OCR will auto-detect language, which is generally great for multi-language documents. If you know your document’s language beforehand and it’s consistently failing to recognize some text, it might be worth checking whether language hints can be given. Currently, the API doesn’t have an explicit language parameter, but one strategy is to run OCR and then use a language detection library on the output to verify the language. If you expect only a certain language, you could post-process anything that looks out of place. In multi-language scenarios, check whether the response metadata for your SDK version lists detected languages; if it does, make use of that where relevant (for example, route French text to a French-specialized process if needed, or just log it for info).
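A cheap way to spot text that "looks out of place" is to profile which Unicode scripts appear in the output. Note that this checks scripts, not languages (English and French both come out as LATIN); real language identification needs a dedicated library such as langdetect. The sketch uses only the standard library, and taking the first word of each character's Unicode name as a script label is a rough heuristic chosen for this sketch, not an official script property. It is handy for catching look-alike characters, such as a Cyrillic "а" inside an English word.

```python
import unicodedata
from collections import Counter
from typing import Dict, Iterable


def script_profile(text: str) -> Counter:
    """Count alphabetic characters by the first word of their Unicode name (a rough script label)."""
    counts: Counter = Counter()
    for ch in text:
        if ch.isalpha():
            # e.g. "LATIN SMALL LETTER A" -> "LATIN", "CYRILLIC CAPITAL LETTER ER" -> "CYRILLIC"
            counts[unicodedata.name(ch, "UNKNOWN").split()[0]] += 1
    return counts


def unexpected_scripts(text: str, expected: Iterable[str] = ("LATIN",),
                       min_share: float = 0.01) -> Dict[str, float]:
    """Return scripts outside `expected` whose share of all letters exceeds min_share."""
    counts = script_profile(text)
    total = sum(counts.values())
    if total == 0:
        return {}
    allowed = set(expected)
    return {script: n / total for script, n in counts.items()
            if script not in allowed and n / total > min_share}
```

Pages where `unexpected_scripts` returns anything could be logged or sent for review rather than silently passed downstream.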

- Combine with Traditional OCR if Needed: In rare cases where absolute fidelity of text is critical (like OCRing a serial number or code where even a small hallucination is unacceptable), you might consider a hybrid approach. For example, use Mistral OCR for the bulk of the document, but also run a traditional OCR on specific fields and cross-verify. Some users noted that LLM-based OCR might occasionally omit something it’s not confident about. If you have ground truth data or can do a validation pass (e.g., check that a total sum in an invoice matches the sum of line items), implement those checks. This isn’t unique to Mistral – any OCR can make mistakes – but because Mistral’s output is so clean, you might be tempted to trust it blindly. For high-stakes data, trust but verify.
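Validation passes like these are easy to automate. The sketch below checks that OCR'd line items add up to the stated invoice total and compares a critical field read by two engines. It assumes US-style number formatting and amounts already isolated as strings, and the function names are illustrative. It uses `Decimal` rather than `float` so that currency sums are exact.

```python
from decimal import Decimal, InvalidOperation
from typing import Iterable


def parse_amount(text: str) -> Decimal:
    """Parse an amount like '$1,250.00'. Assumes ',' as thousands and '.' as decimal separator."""
    cleaned = text.strip().lstrip("$€£").replace(",", "").strip()
    try:
        return Decimal(cleaned)
    except InvalidOperation:
        raise ValueError(f"not an amount: {text!r}") from None


def totals_match(line_items: Iterable[str], stated_total: str,
                 tolerance: Decimal = Decimal("0.01")) -> bool:
    """Check that the OCR'd line items add up to the OCR'd invoice total."""
    computed = sum((parse_amount(item) for item in line_items), Decimal("0"))
    return abs(computed - parse_amount(stated_total)) <= tolerance


def fields_agree(value_a: str, value_b: str) -> bool:
    """Compare one critical field (e.g. a serial number) as read by two OCR engines."""
    def normalize(value: str) -> str:
        # Ignore whitespace and letter case; any other difference counts as a mismatch.
        return "".join(value.split()).upper()
    return normalize(value_a) == normalize(value_b)
```

A failed check (or a `ValueError` from an unparseable amount) is a signal to route the document to human review rather than accept the extraction.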

- Memory and Post-Processing Considerations: The object returned by `client.ocr.process` might be large (imagine a 300-page PDF with images included: it could be hundreds of MBs of data if images are embedded). Be mindful of memory usage:

  - If you only need text, don't set `include_image_base64` to True, which keeps responses lighter.

  - Write out results (text and images) to disk incrementally if processing many pages, instead of holding everything in memory.

  - If you plan to send the OCR text into another LLM, consider the context length. For instance, Mistral’s own chat models or others like GPT-4 have token limits. You might summarize or chunk the text if it's too large to send in one go.
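For incremental writes and context-window chunking, a minimal sketch follows. `write_pages` assumes page objects expose `.index` and `.markdown`, as the OCR response's `pages` entries are expected to; adapt the names if your SDK differs. `chunk_markdown` splits on blank lines so paragraphs and Markdown tables stay intact, and sizes chunks in characters using the rough rule of thumb of about four characters per English token; use your target model's tokenizer when the limit matters.

```python
from pathlib import Path
from typing import Any, Iterable, List


def write_pages(pages: Iterable[Any], out_dir: Path) -> int:
    """Write each page's Markdown to its own file as soon as it is available."""
    # Assumption: each page exposes `.index` and `.markdown`; adapt if your SDK differs.
    out_dir.mkdir(parents=True, exist_ok=True)
    count = 0
    for page in pages:
        (out_dir / f"page-{page.index:04d}.md").write_text(page.markdown, encoding="utf-8")
        count += 1
    return count


def chunk_markdown(markdown: str, max_chars: int = 8000) -> List[str]:
    """Split Markdown on blank lines into chunks of at most max_chars characters.

    Rough guide: ~4 characters per English token, so 8000 chars is about 2000 tokens.
    A single block longer than max_chars is kept whole, so that chunk can exceed the limit.
    """
    chunks: List[str] = []
    current: List[str] = []
    size = 0  # length of "\n\n".join(current)
    for block in markdown.split("\n\n"):
        extra = len(block) + (2 if current else 0)  # +2 for the joining blank line
        if current and size + extra > max_chars:
            chunks.append("\n\n".join(current))
            current, size = [], 0
            extra = len(block)
        current.append(block)
        size += extra
    if current:
        chunks.append("\n\n".join(current))
    return chunks
```

Joining the chunks with blank lines reproduces the original text, so nothing is lost between chunks; summarizing each chunk separately and then combining the summaries is one common way to stay within a context limit.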

- Use Structured Output Features: Whenever possible, use the `response_format` or function calling features to have the model structure the data for you. This reduces error-prone string parsing in your code. For example, if you want an address block parsed into `{"street": ..., "city": ..., "zip": ...}`, you can prompt the model accordingly and enforce a format. Mistral’s platform allows you to define these schemas, which is a big time saver for building information extraction workflows. Experiment with this in a controlled setting to ensure the model stays within format (the `temperature=0` setting can help make outputs more deterministic for parsing tasks).
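Here is one way to combine the OCR output with a JSON-mode chat call and then validate the result. The prompt-building and validation functions are plain Python. `make_mistral_complete` is a hypothetical adapter assuming the 1.x `mistralai` SDK's `client.chat.complete(...)` with `response_format={"type": "json_object"}` and `temperature=0`, and the default model name is an assumption too. JSON mode should give syntactically valid JSON, but it does not guarantee your keys, hence `parse_address`.

```python
import json
from typing import Callable, Dict, List

ADDRESS_KEYS = ("street", "city", "zip")
Messages = List[Dict[str, str]]


def build_address_messages(ocr_markdown: str) -> Messages:
    """Prompt asking for a JSON object with exactly the address keys we expect."""
    system = (
        "Extract the postal address from the document. Reply with a JSON object "
        'containing the string fields "street", "city" and "zip" and nothing else.'
    )
    return [{"role": "system", "content": system},
            {"role": "user", "content": ocr_markdown}]


def parse_address(raw: str) -> Dict[str, str]:
    """Validate the reply; json.loads raises a ValueError subclass on malformed JSON."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = [key for key in ADDRESS_KEYS if key not in data]
    if missing:
        raise ValueError(f"missing keys: {missing}")
    return {key: str(data[key]) for key in ADDRESS_KEYS}  # drop any extra keys


def extract_address(ocr_markdown: str, complete: Callable[[Messages], str]) -> Dict[str, str]:
    """Send the OCR text to an LLM via `complete` and return a validated address dict."""
    return parse_address(complete(build_address_messages(ocr_markdown)))


def make_mistral_complete(api_key: str, model: str = "mistral-small-latest") -> Callable[[Messages], str]:
    """Hypothetical adapter for the mistralai chat API; check names against your SDK version."""
    from mistralai import Mistral  # imported lazily so the rest runs without the SDK

    client = Mistral(api_key=api_key)

    def complete(messages: Messages) -> str:
        response = client.chat.complete(
            model=model,
            messages=messages,
            response_format={"type": "json_object"},  # JSON mode
            temperature=0,  # more deterministic output for parsing
        )
        return response.choices[0].message.content

    return complete
```

Calling `extract_address(page.markdown, make_mistral_complete(api_key))` would then return a dict with exactly the three keys, or raise `ValueError` if the reply does not fit, which is the point to retry or flag the document.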

- Stay Updated and Engage: Mistral AI is actively updating their models and SDK. Keep an eye on the Mistral OCR documentation and changelog for new features or improvements. Join their Discord or forums to ask questions – often best practices come directly from the developers or other users who faced similar challenges. Given how fast this space moves, today’s best practice might be superseded by a new feature tomorrow (for instance, if they release an explicit table extraction mode or a new, larger OCR model).

By following these tips, you can ensure smoother integration and more reliable outcomes when using Mistral OCR. Essentially, treat Mistral OCR as a powerful component: feed it good data, handle its outputs carefully, and it will drastically reduce the manual effort in your document processing tasks.

Conclusion and Outlook

Mistral OCR represents a significant leap forward in the AI-driven processing of documents. In this deep dive, we covered its core capabilities – from extracting text with layout preservation to integrating with downstream systems for question-answering and structured data output. We explored how to set it up, use it on various inputs (PDFs, images, etc.), and combine it with frameworks like LangChain to build intelligent applications. We also delved into advanced techniques like using documents as prompts and enforcing structured outputs, and discussed real-world applications across industries.

The key takeaway is that Mistral OCR is not just an OCR engine; it's a holistic document understanding service. It bridges the gap between unstructured raw documents and the structured world of databases and AI models. For developers and data scientists, this means previously unapproachable data (like a scanned contract or a printed report) can now be seamlessly incorporated into pipelines, searchable knowledge bases, or interactive AI agents.

Outlook: Going forward, we can expect Mistral OCR to continue improving. Mistral AI has hinted at continuously training and refining their models (“mistral-ocr-latest” will evolve), so accuracy with difficult inputs will likely get even better. We might see support for even more complex outputs (for example, directly getting an HTML or a Word document with the same formatting). The claims of top-tier benchmark results and category-leading speed in their announcements suggest they are pushing the envelope on both quality and speed, possibly integrating newer techniques or larger multimodal models.

In the broader AI ecosystem, Mistral OCR fits in as a crucial component for multimodal AI. Large Language Models are getting better at language reasoning but need tools like OCR to feed them information from the physical world of documents. Mistral’s vision to build an open-source-driven AI stack is evident: their LLM (Mistral 7B and beyond) is open, and they are combining it with vision (OCR) and other capabilities. We might see more unified models (e.g., a future where the OCR and the LLM are one system that directly answers from images, akin to what multimodal versions of GPT-4 can already do). Mistral OCR could also become more tightly integrated with vector databases and automations: imagine an out-of-the-box pipeline where you point it to a folder of documents and it creates a fully indexed QA system.

For now, integrations with Hugging Face and other partners may make it more accessible. If the model becomes available through Hugging Face or other inference providers, you may not even need the Mistral SDK: you could load a model from Hugging Face or use an inference API call to get OCR results, which would broaden adoption. The promise of on-prem deployment also means it could become the go-to solution for enterprises that previously relied on older OCR systems like Tesseract or ABBYY FineReader, offering them an upgrade in intelligence without compromising security.

In conclusion, Mistral OCR is a powerful tool that transforms how we handle documents in the age of AI. It empowers applications to see and read documents with a level of understanding that was previously unattainable without building complex pipelines. By incorporating Mistral OCR, developers and organizations can unlock insights from their troves of PDFs and images, automate tedious processes, and build richer AI applications that truly understand their data. The journey of document digitization and analysis is entering a new era with tools like Mistral OCR leading the way, and it's an exciting space to watch (and participate in) for anyone involved in AI and data engineering.

Cohorte Team

April 2, 2025