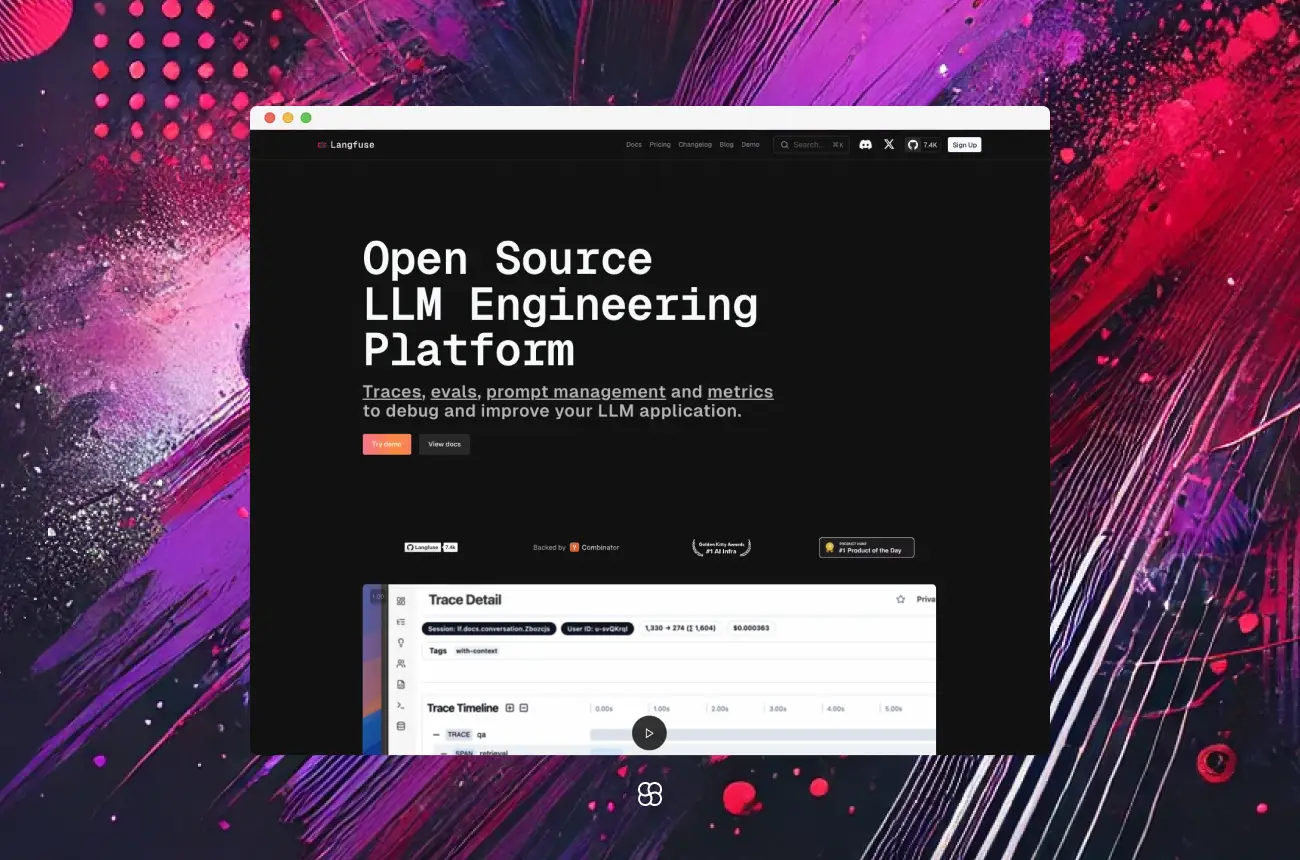

Langfuse: The Open-Source Powerhouse for Building and Managing LLM Applications

Langfuse is an open-source platform designed to enhance the development and management of Large Language Model (LLM) applications. It offers tools for observability, prompt management, evaluation, and more, simplifying the complexities involved in building LLM-based solutions.

Why Langfuse?

Langfuse provides several key benefits for LLM application development:

- Open Source: Langfuse is open source, allowing for customization and self-hosting.

- Platform Agnostic: It integrates seamlessly with various models and frameworks, including LangChain and LlamaIndex.

- Detailed Metrics: Langfuse offers in-depth insights and metrics to improve your LLM applications.

- Real-time Monitoring and Evaluations: It enables real-time monitoring and evaluation to keep track of your models’ performance.

- Scalability: Langfuse is built to scale with your projects, from small prototypes to enterprise-level deployments.

In comparison, LangSmith is a closed-source platform developed by the LangChain team, integrating closely with the LangChain framework. While LangSmith offers robust tools for debugging and monitoring LLM applications, it requires a paid license for self-hosting and may not provide the same level of flexibility and customization as Langfuse.

Therefore, if you’re working exclusively with LangChain, LangSmith might be suitable. However, for broader integrations, customization, and the ability to self-host without additional costs, Langfuse could be the better choice.

Getting Started with Langfuse

Installation and Setup

1. Install the Langfuse and OpenAI Packages:

Ensure you have Python installed, then run:

    pip install langfuse openai

2. Set Up Environment Variables:

Configure your environment with the necessary API keys and host information:

    import os

    # Replace the placeholder values with your own credentials.
    os.environ['LANGFUSE_SECRET_KEY'] = 'your_secret_key'
    os.environ['LANGFUSE_PUBLIC_KEY'] = 'your_public_key'
    os.environ['LANGFUSE_HOST'] = 'https://cloud.langfuse.com'
    os.environ['OPENAI_API_KEY'] = 'your_openai_api_key'

3. Initialize Langfuse:
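
Before creating the client, it can help to confirm that the variables from the previous step are actually set, since a missing key is an easy mistake to overlook. The following is a minimal sketch using only the standard library; the helper name `missing_env_vars` and the `REQUIRED_VARS` tuple are illustrative assumptions, not part of the Langfuse SDK.

```python
import os

# Variable names mirror the setup step above; adjust the tuple to your own setup.
REQUIRED_VARS = (
    "LANGFUSE_SECRET_KEY",
    "LANGFUSE_PUBLIC_KEY",
    "LANGFUSE_HOST",
    "OPENAI_API_KEY",
)


def missing_env_vars(required=REQUIRED_VARS, env=None):
    """Return the names of required variables that are unset or blank.

    Hypothetical helper for illustration; `env` defaults to os.environ.
    """
    env = os.environ if env is None else env
    return [name for name in required if not env.get(name, "").strip()]


missing = missing_env_vars()
if missing:
    print("Missing configuration:", ", ".join(missing))
else:
    print("All required variables are set.")
```

Passing a plain dictionary as `env` makes the check easy to exercise without touching the real environment.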

Initialize the Langfuse client in your application:

    from langfuse import Langfuse

    langfuse = Langfuse()

First Steps and First Run

1. Define Your Function with the @observe Decorator:

Use the @observe decorator to trace your function:

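    # Import path for Langfuse Python SDK v2; in SDK v3 the decorator is imported with `from langfuse import observe`.
    from langfuse.decorators import observe
    # Drop-in wrapper around the OpenAI client that logs each call to Langfuse.
    from langfuse.openai import openai

    @observe()
    def generate_story():
        # openai>=1.0 uses chat.completions.create; the older ChatCompletion.create was removed.
        response = openai.chat.completions.create(
            model="gpt-3.5-turbo",
            messages=[
                {"role": "system", "content": "You are a great storyteller."},
                {"role": "user", "content": "Once upon a time in a galaxy far, far away..."}
            ]
        )
        return response.choices[0].message.content

2. Execute Your Function: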

Call your function to generate a story:

    story = generate_story()
    print(story)

3. View Traces in Langfuse Dashboard:

After running your application, log in to your Langfuse dashboard to view the trace of your function execution.
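
To build intuition for what a trace records, here is a simplified stand-in for an `@observe`-style decorator. It is not Langfuse's implementation: the names `observe_sketch` and `TRACES` are hypothetical, and the records stay in an in-memory list instead of being sent to a server. The sketch only illustrates the idea of wrapping a function to capture its inputs, output, errors, and duration.

```python
import functools
import time

# In-memory stand-in for a tracing backend (assumption for illustration only).
TRACES = []


def observe_sketch(func):
    """Wrap `func` so that each call appends a trace-like record to TRACES."""

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        record = {"name": func.__name__, "args": args, "kwargs": kwargs}
        start = time.perf_counter()
        try:
            result = func(*args, **kwargs)
            record["output"] = result
            return result
        except Exception as exc:
            # Keep a textual form of the error, then let it propagate unchanged.
            record["error"] = repr(exc)
            raise
        finally:
            # Runs on success and on failure, so every call is recorded.
            record["duration_s"] = time.perf_counter() - start
            TRACES.append(record)

    return wrapper


@observe_sketch
def shout(text):
    return text.upper()


shout("hello")
print(TRACES[-1]["name"], TRACES[-1]["output"])
```

A real tracing SDK adds much more on top of this pattern, such as nesting of calls, batching, and network delivery, but the wrapping step is the core idea behind decorating a function to make it observable.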

Examples of Application

Example 1: Summarization Application

Here’s how you can build a summarization application using Langfuse and OpenAI:

    from langfuse.decorators import observe
    from langfuse.openai import openai

    @observe()
    def summarize_text(text):
        # openai>=1.0 call style; the older ChatCompletion.create was removed.
        response = openai.chat.completions.create(
            model="gpt-3.5-turbo",
            messages=[
                {"role": "system", "content": "You are a summarization assistant."},
                {"role": "user", "content": f"Summarize the following text: {text}"}
            ]
        )
        return response.choices[0].message.content

    summary = summarize_text("Your long text here.")
    print(summary)

Example 2: Question-Answering Application

Implement a question-answering system:

    from langfuse.decorators import observe
    from langfuse.openai import openai

    @observe()
    def answer_question(question):
        # openai>=1.0 call style; the older ChatCompletion.create was removed.
        response = openai.chat.completions.create(
            model="gpt-3.5-turbo",
            messages=[
                {"role": "system", "content": "You are a knowledgeable assistant."},
                {"role": "user", "content": question}
            ]
        )
        return response.choices[0].message.content

    answer = answer_question("What is the capital of France?")
    print(answer)

Expert Insights

Developers praise Langfuse for its precision and ease of use.

Mava, an AI company, reported massive time savings in debugging and optimizing their LLMs by using Langfuse, which replaced guesswork with real-time insights into performance, costs, and model behavior.

With its open-source flexibility and scalability, Langfuse has become a trusted tool for companies looking to build better, faster, and smarter AI systems.

Final Thoughts

Langfuse streamlines the development of LLM applications by providing essential tools for observability, prompt management, and evaluation. Its open-source nature and seamless integration with various models and frameworks make it a valuable asset.

Cohorte Team

December 24, 2024