How to Build a Custom MCP Server with Gitingest, FastMCP & Gemini 2.5 Pro

This guide shows how to quickly build a working Model Context Protocol (MCP) server by combining Gitingest’s repo ingestion, FastMCP’s Python framework, and Gemini 2.5 Pro’s code generation. In a few steps, you’ll pull any GitHub project in as text, scaffold Python tool endpoints, and run the server locally with minimal boilerplate. The approach works well for exposing internal APIs, docs, or data stores to an LLM via MCP. Let's dive in.

Why Roll Your Own MCP Server?

- Custom tool endpoints tailored to your codebase or APIs

- Minimal boilerplate thanks to FastMCP’s decorators

- Automated scaffolding with Gemini 2.5 Pro in AI Studio

- Rapid iteration: from repo to running server in minutes

Prerequisites

- Python 3.10+ (with access to `pipx` or a virtual environment)

- Git (to clone repos)

- AI Studio account with access to Gemini 2.5 Pro

- `uv` CLI (installed via `pip install uv`, for local testing)
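Before starting, you can confirm the local prerequisites with a small stdlib-only script. This is a hypothetical helper, not part of any tool in this guide: it only checks the Python version and whether `git`, `pipx`, and `uv` are on your `PATH` (pipx is optional if you use a virtual environment), and it cannot verify AI Studio access.

```python
import shutil
import sys


def check_prerequisites(tools: tuple[str, ...] = ("git", "pipx", "uv")) -> dict[str, bool]:
    """Return a name -> satisfied map for the Python version and each CLI tool."""
    results = {"python>=3.10": sys.version_info >= (3, 10)}
    for tool in tools:
        # shutil.which returns None when the executable is not found on PATH.
        results[tool] = shutil.which(tool) is not None
    return results


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print(f"{'ok' if ok else 'MISSING':<8} {name}")
```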

1. Install & Run Gitingest

Gitingest turns any GitHub repository into a single prompt‑friendly text file.

```bash
# Install via pipx for isolation
pipx install gitingest

# Ingest the FastMCP repo
gitingest https://github.com/jlowin/fastmcp -o fastmcp_source.txt
```

- Output: `fastmcp_source.txt` contains the directory structure, READMEs, and source files.
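Gitingest also ships a Python API, which is handy if you want to regenerate the digest from a script. The sketch below assumes the `ingest()` function shown in Gitingest's README, which returns a `(summary, tree, content)` tuple. The helper name, the injectable `ingest_fn` parameter (there only so the helper can be tested offline), and the file layout it writes are illustrative choices and may not match the CLI's `-o` output byte for byte.

```python
from pathlib import Path
from typing import Callable, Optional, Tuple

# Signature of gitingest.ingest as assumed here: source -> (summary, tree, content)
IngestFn = Callable[[str], Tuple[str, str, str]]


def ingest_to_file(source: str, out_path: str, ingest_fn: Optional[IngestFn] = None) -> str:
    """Ingest a repo, save its tree and file contents to out_path, return the summary."""
    if ingest_fn is None:
        # Imported lazily so the helper can be exercised without gitingest installed.
        from gitingest import ingest as ingest_fn
    summary, tree, content = ingest_fn(source)
    # Layout (tree, blank line, contents) is this sketch's choice, not the CLI's format.
    Path(out_path).write_text(tree + "\n\n" + content, encoding="utf-8")
    return summary


if __name__ == "__main__":
    print(ingest_to_file("https://github.com/jlowin/fastmcp", "fastmcp_source.txt"))
```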

2. Write Your MCP Server (server.py)

Using FastMCP v2.x, define your tools in pure Python. Here’s a minimal example exposing a “directory tree” endpoint:

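```python
# server.py
# /// script
# requires-python = ">=3.10"
# dependencies = [
#   "fastmcp>=2.0",
#   "httpx>=0.27",
#   "rich>=13.7",
# ]
# ///

import io

import httpx
from fastmcp import FastMCP
from rich.console import Console
from rich.text import Text
from rich.tree import Tree

# Initialize the MCP server instance
mcp = FastMCP("GitHub Directory Server")


@mcp.tool()
async def github_directory_structure(
    owner: str,
    repo: str,
    branch: str = "main",
) -> str:
    """
    Return the repo's directory tree as plain text.
    """
    url = f"https://api.github.com/repos/{owner}/{repo}/git/trees/{branch}?recursive=1"
    async with httpx.AsyncClient() as client:
        resp = await client.get(url, timeout=10)
        resp.raise_for_status()
        data = resp.json()

    # Text(...) keeps rich from parsing names like "[slug]" as markup.
    root = Tree(Text(f"{owner}/{repo}@{branch}"))
    # Map each directory path to its node; sorting puts "src" before "src/x",
    # so a parent node always exists before its children are attached.
    nodes = {"": root}
    for entry in sorted(data["tree"], key=lambda e: e["path"]):
        if entry["type"] == "tree":
            parent, _, name = entry["path"].rpartition("/")
            nodes[entry["path"]] = nodes.get(parent, root).add(Text(name))

    # str(tree) does not render a rich Tree, so print it into an in-memory buffer.
    # (Printing to stdout would corrupt the stdio MCP transport.)
    buffer = io.StringIO()
    Console(file=buffer, width=200).print(root)
    return buffer.getvalue()


if __name__ == "__main__":
    mcp.run()
```

Key points: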

- Use `@mcp.tool()` (with parentheses) to register each endpoint.

- Return plain Python values (`str`, `list`, etc.); FastMCP handles the JSON serialization.

- Async I/O via `httpx.AsyncClient()` keeps your server non-blocking.
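Turning GitHub's flat list of paths into nested directories is the only non-trivial logic in this tool, so it can be worth isolating it in a pure function you can unit-test without network access or `rich`. The sketch below is an illustrative stdlib-only variant, not a FastMCP or rich API: it assumes entries shaped like the `tree` array of GitHub's recursive trees response (`path` plus `type`, where directories are `"tree"`), draws ASCII guides, and ignores the `truncated` flag GitHub sets when a repository is too large to list in full.

```python
def directory_tree_text(label: str, entries: list[dict]) -> str:
    """Render the directory entries of a GitHub recursive-tree payload as ASCII."""
    # Map each directory path to its child directory paths; "" is the repo root.
    children: dict[str, list[str]] = {"": []}
    # Sorting puts a parent ("src") before its children ("src/x") and orders siblings.
    for entry in sorted(entries, key=lambda e: e["path"]):
        if entry["type"] != "tree":  # skip files ("blob") and submodules ("commit")
            continue
        path = entry["path"]
        children.setdefault(path.rpartition("/")[0], []).append(path)
        children.setdefault(path, [])

    lines = [label]

    def walk(parent: str, prefix: str) -> None:
        kids = children.get(parent, [])
        for i, path in enumerate(kids):
            last = i == len(kids) - 1
            lines.append(prefix + ("`-- " if last else "|-- ") + path.rpartition("/")[2])
            walk(path, prefix + ("    " if last else "|   "))

    walk("", "")
    return "\n".join(lines)
```

For example, directory entries `docs`, `docs/api`, `src`, and `src/fastmcp` render as `docs` and `src` under the label, with `api` nested beneath `docs` and `fastmcp` beneath `src`; file entries are skipped.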

3. Manage Dependencies & Run Locally

Option A: Inline PEP 723 Block

Declare your dependencies in a PEP 723 `# /// script` metadata block at the top of `server.py`, then:

```bash
pip install uv
uv run server.py
```

Option B: Dedicated requirements.txt

```text
# requirements.txt
fastmcp>=2.0
httpx>=0.27
rich>=13.7
```

```bash
pip install -r requirements.txt uv
uv run server.py
```

For a richer local dev experience, `fastmcp dev` runs the server together with the MCP Inspector UI:

uv init my-mcp

cd my-mcp

uv venv && source .venv/bin/activate

uv pip install fastmcp httpx rich

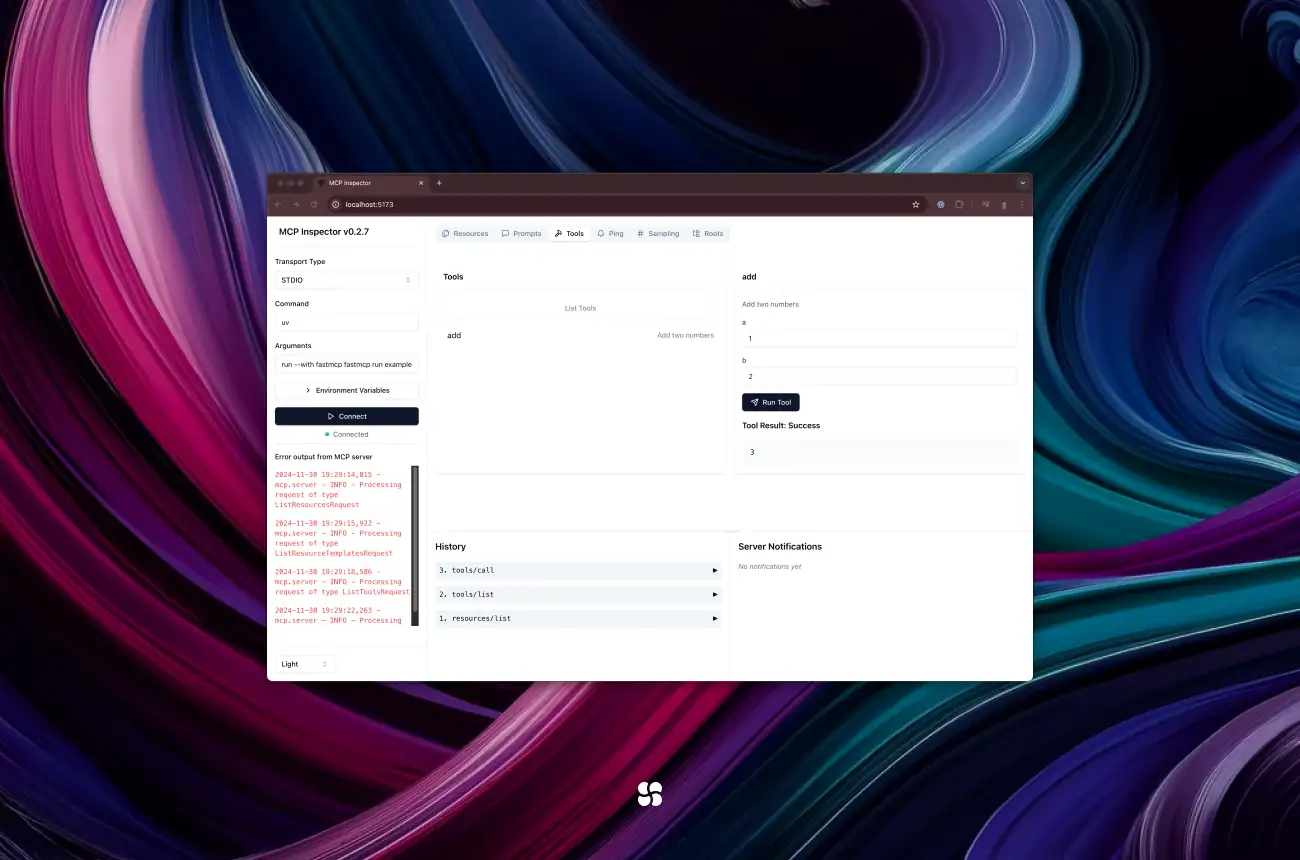

fastmcp dev ../server.py4. Test Your Endpoint

With `fastmcp dev` running, open the MCP Inspector URL it prints, connect to the server, and call `github_directory_structure` from the Tools tab with the arguments `{"owner": "jlowin", "repo": "fastmcp"}`. You should see a text tree of the fastmcp repo's directories.

5. Upload & Scaffold with Gemini 2.5 Pro

- Upload `fastmcp_source.txt` in Google AI Studio’s Files panel.

- Copy the returned file ID (e.g., `files/abcd1234`).

- Prompt Gemini in a “Code Generation” notebook:

```text
<<file:files/abcd1234>>

Generate a Python MCP server using FastMCP v2.x that:
1. Exposes a github_directory_structure tool.
2. Includes proper @mcp.tool() decorators.
3. Provides requirements.txt and Dockerfile.
```

Gemini 2.5 Pro will emit a multi-file scaffold you can download and review before running.
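If you'd rather drive the same step from a script than from the AI Studio UI, the Gemini API's Python SDK (`google-genai`) can upload the digest and send the prompt. This is a sketch under assumptions: it expects `pip install google-genai`, an API key in the `GEMINI_API_KEY` environment variable, a recent SDK where `files.upload` takes `file=`, and the model ID `gemini-2.5-pro` (preview releases used other IDs, so check the models available to your key). The SDK is imported inside the function so the prompt-building part runs without it.

```python
PROMPT_TEMPLATE = """Generate a Python MCP server using FastMCP v2.x that:
1. Exposes a {tool_name} tool.
2. Includes proper @mcp.tool() decorators.
3. Provides requirements.txt and Dockerfile."""


def build_prompt(tool_name: str = "github_directory_structure") -> str:
    """Fill in the scaffold request used in the AI Studio prompt."""
    return PROMPT_TEMPLATE.format(tool_name=tool_name)


def generate_scaffold(digest_path: str, model: str = "gemini-2.5-pro") -> str:
    """Upload the Gitingest digest and ask Gemini for a server scaffold."""
    from google import genai  # lazy import: requires `pip install google-genai`

    client = genai.Client()  # picks up the API key from the environment
    uploaded = client.files.upload(file=digest_path)
    response = client.models.generate_content(
        model=model,
        contents=[uploaded, build_prompt()],
    )
    return response.text


if __name__ == "__main__":
    print(generate_scaffold("fastmcp_source.txt"))
```

As with the UI route, treat the returned text as a draft: save the files it proposes, read them, and run them locally before deploying.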

6. Deploying in Production

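- Containerize with the generated `Dockerfile`.

- Switch transports (e.g., SSE or HTTP) by passing a `transport` argument to `mcp.run()`, or mount the server's ASGI app in FastAPI (the method that exposes that app differs between FastMCP versions).

- Secure endpoints via API keys or OAuth middleware.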

Final Checklist

- `gitingest` v0.1.4+ via `pipx`

- `server.py` uses `FastMCP` and `@mcp.tool()`

- Dependencies installed and `uv run server.py` succeeds

- AI Studio prompt references the correct file ID

- Local test returns a valid directory tree

There you have it: a custom MCP server, ready to plug into your LLM-based applications.

Until the next one,

Cohorte Team

April 17, 2025