Getting Started with Microsoft Phi: Exploring Microsoft’s Latest AI Model Library

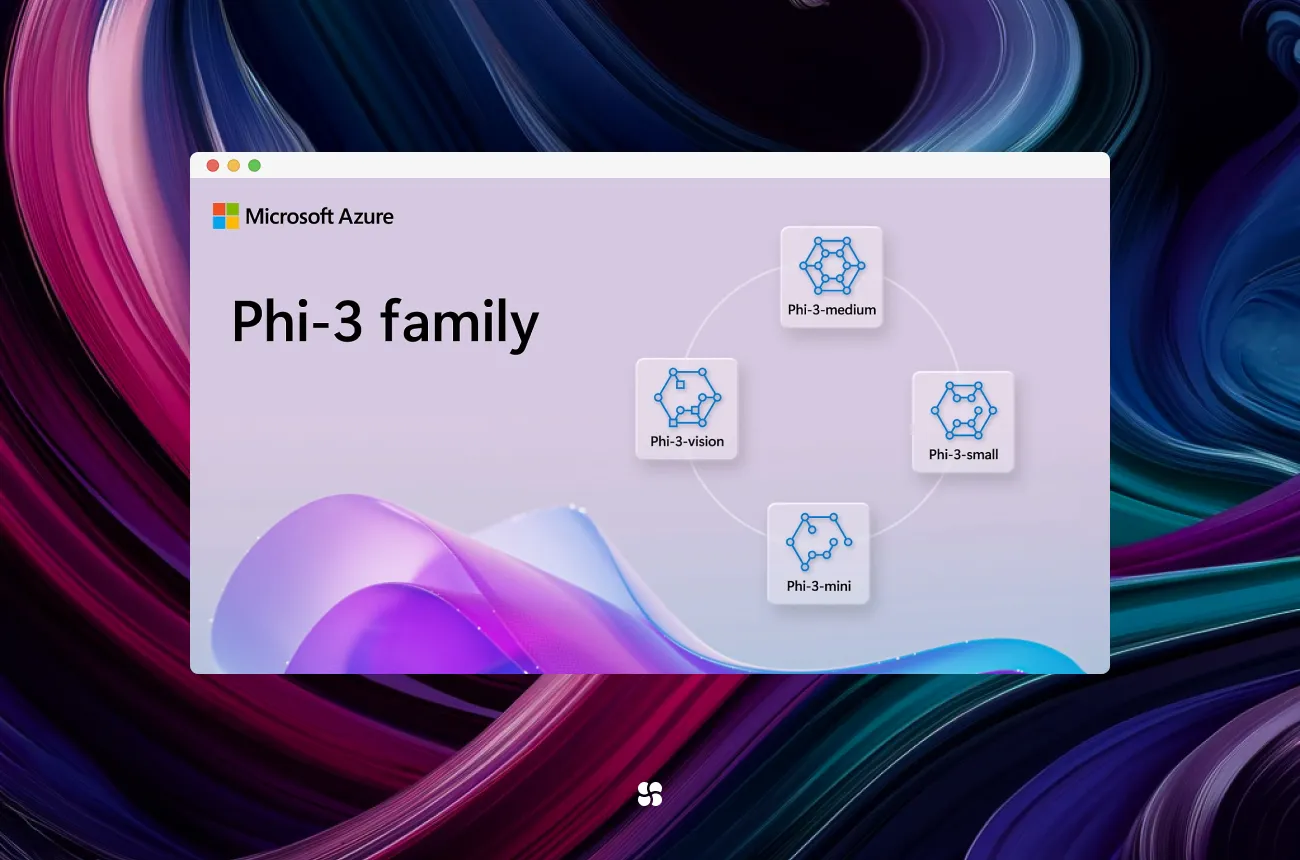

Microsoft Phi is a family of small language models (SLMs) designed to deliver strong performance even on resource‑constrained devices. The Phi‑3 family includes the text models Phi‑3‑mini, Phi‑3‑small, and Phi‑3‑medium. These suit tasks such as text generation and conversational agents. The family also includes a multimodal variant, Phi‑3‑vision, for tasks that combine images and text. The models can be fine‑tuned to adapt them to specific domains while maintaining safety and efficiency.

1. Benefits of Using Microsoft Phi

• Lightweight & Efficient: Despite their small footprint, Phi models perform comparably to much larger models on many benchmarks. This makes them practical for mobile and edge deployment.

• Flexibility: With several variants available, users can choose the right model based on the task requirements and available hardware resources.

• Open Source: Released under the MIT license, the Phi models encourage community contributions and rapid innovation.

• Ease of Integration: Phi works with popular libraries such as Hugging Face Transformers and Accelerate. Integrating it into existing data science pipelines or custom AI agents is therefore straightforward.
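A rough rule of thumb makes the hardware trade‑off concrete: weight memory ≈ parameter count × bytes per parameter. The sketch below assumes the published sizes of roughly 3.8B (mini), 7B (small), and 14B (medium) parameters. It counts weights only, so activations, the KV cache, and runtime overhead add more. `pick_variant` and its `headroom` factor are hypothetical helpers for illustration, not part of any Phi tooling.

```python
# Approximate parameter counts, in billions, for the Phi-3 text models
# (published sizes; treat them as rough figures).
PHI3_PARAMS_B = {
    "Phi-3-mini": 3.8,
    "Phi-3-small": 7.0,
    "Phi-3-medium": 14.0,
}

# Storage cost of one parameter at common precisions, in bytes.
BYTES_PER_PARAM = {"float32": 4.0, "float16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billion: float, dtype: str) -> float:
    """Estimate the size of the weights alone in GB (1 GB = 1e9 bytes).

    Billions of parameters times bytes per parameter gives gigabytes directly.
    Activations, the KV cache, and framework overhead are not included.
    """
    return params_billion * BYTES_PER_PARAM[dtype]


def pick_variant(available_gb: float, dtype: str = "float16", headroom: float = 1.2):
    """Hypothetical helper: return the largest variant whose weights, padded by
    a `headroom` factor for runtime overhead, fit in `available_gb`, else None."""
    best = None
    # Walk from smallest to largest so the last fitting variant wins.
    for name, params in sorted(PHI3_PARAMS_B.items(), key=lambda item: item[1]):
        if weight_memory_gb(params, dtype) * headroom <= available_gb:
            best = name
    return best


if __name__ == "__main__":
    for name, params in PHI3_PARAMS_B.items():
        fp16 = weight_memory_gb(params, "float16")
        int4 = weight_memory_gb(params, "int4")
        print(f"{name}: ~{fp16:.1f} GB in float16, ~{int4:.1f} GB in 4-bit")
    print("Best fit for 16 GB (float16):", pick_variant(16.0))
```

By this estimate, Phi‑3‑mini needs about 7.6 GB for float16 weights and about 1.9 GB at 4 bits. That gap is one reason quantization matters for edge deployment.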

2. Getting Started: Installation & First Run

Before diving into agent development, install the necessary Python packages. Open your terminal and run:

```shell
pip install -qqq accelerate transformers auto-gptq optimum
```

(`auto-gptq` and `optimum` are only needed for GPTQ‑quantized checkpoints. The unquantized examples below run without them.)

Once installed, you can test the model with a simple text completion. For example, create a Python script with the following code:

```python
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM, set_seed

# Make sampling reproducible across runs
set_seed(2024)

prompt = "Africa is an emerging economy because"
model_checkpoint = "microsoft/Phi-3-mini-4k-instruct"

# Use the GPU in half precision if available; fall back to float32 on CPU,
# where many float16 operations are slow or unsupported
use_cuda = torch.cuda.is_available()

# Load tokenizer and model
tokenizer = AutoTokenizer.from_pretrained(model_checkpoint, trust_remote_code=True)
model = AutoModelForCausalLM.from_pretrained(
    model_checkpoint,
    trust_remote_code=True,
    torch_dtype=torch.float16 if use_cuda else torch.float32,
    device_map="cuda" if use_cuda else "cpu",
)

# Tokenize the prompt and sample up to 120 new tokens
inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
outputs = model.generate(**inputs, do_sample=True, max_new_tokens=120)

# The decoded text contains the prompt followed by the generated continuation
response = tokenizer.decode(outputs[0], skip_special_tokens=True)
print("Generated Response:")
print(response)
```

This code initializes the Phi‑3‑mini model, tokenizes a sample prompt, and generates a text continuation. It’s a quick way to verify that your installation is working correctly.
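If the script fails before printing anything, first confirm what is actually installed. The sketch below uses only the standard library's `importlib.metadata` to report the versions of the packages from the install step. It also checks whether PyTorch can see a CUDA device. The helper names `installed_versions` and `cuda_available` are illustrative, not part of any library.

```python
import importlib.metadata as metadata
from typing import Dict, Optional

# Distributions from the install step, plus PyTorch itself.
PACKAGES = ("torch", "transformers", "accelerate", "optimum", "auto-gptq")


def installed_versions(packages=PACKAGES) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if missing."""
    versions: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


def cuda_available() -> bool:
    """True if PyTorch can be imported and reports a usable CUDA device."""
    try:
        import torch
    except ImportError:
        return False
    return bool(torch.cuda.is_available())


if __name__ == "__main__":
    for name, version in installed_versions().items():
        print(f"{name:>12}: {version or 'NOT INSTALLED'}")
    status = "available" if cuda_available() else "not available (CPU only)"
    print(f"{'CUDA':>12}: {status}")
```

A missing `torch` or `transformers` entry explains an import error. "CUDA not available" means the examples will run on the CPU in float32, which works but is much slower.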

3. Step‑by‑Step Example: Building a Simple Agent

Let’s build a simple chatbot agent that responds to user input using Microsoft Phi. This example uses the same setup as above but adds a basic loop for interaction:

```python
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM, set_seed

# Set up reproducibility and model checkpoint
set_seed(2024)
model_checkpoint = "microsoft/Phi-3-mini-4k-instruct"

# Load model and tokenizer (float16 on GPU, float32 on CPU)
use_cuda = torch.cuda.is_available()
tokenizer = AutoTokenizer.from_pretrained(model_checkpoint, trust_remote_code=True)
model = AutoModelForCausalLM.from_pretrained(
    model_checkpoint,
    trust_remote_code=True,
    torch_dtype=torch.float16 if use_cuda else torch.float32,
    device_map="cuda" if use_cuda else "cpu",
)


def generate_response(user_input):
    # Label the turns so the model continues speaking as the assistant
    prompt = f"User: {user_input}\nAssistant:"
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    outputs = model.generate(**inputs, do_sample=True, max_new_tokens=100)
    # generate() returns prompt + reply; decode only the newly generated tokens
    new_tokens = outputs[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True).strip()


# Simple interactive loop
print("Welcome to the Microsoft Phi Chatbot! (Type 'exit' to quit)")
while True:
    user_input = input("You: ")
    if user_input.strip().lower() == "exit":
        break
    response = generate_response(user_input)
    print("Assistant:", response)
```

This script creates a basic interactive agent:

1. It formats the conversation with clear “User” and “Assistant” labels.

2. Each user input is tokenized and processed to generate a reply.

3. The agent loop continues until the user types “exit.”
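Because this prompt uses plain‑text labels rather than Phi‑3's own chat format, a sampled reply can run on and invent the next "User:" turn. The sketch below cuts the reply at the first such label and assumes the "User:/Assistant:" format above. Apply it only to the newly generated text: slice off the prompt with `outputs[0][inputs["input_ids"].shape[1]:]` before decoding, or the cut will land inside the echoed prompt. `clean_reply` is a hypothetical helper.

```python
def clean_reply(generated: str, stop_labels=("User:", "Assistant:")) -> str:
    """Trim a raw completion at the first invented turn label.

    Assumes the plain 'User:/Assistant:' prompt format and that `generated`
    holds only the model's new text, not the prompt.
    """
    cut = len(generated)
    for label in stop_labels:
        idx = generated.find(label)
        if idx != -1:
            cut = min(cut, idx)  # keep the earliest label position
    return generated[:cut].strip()


if __name__ == "__main__":
    raw = " Paris is the capital of France.\nUser: And Spain?\nAssistant: Madrid."
    print(clean_reply(raw))
```

This is a simple string heuristic. It will also truncate a legitimate reply that happens to contain "User:", which is one reason to prefer the model's chat template for anything beyond a demo.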

This approach can be extended with additional functionality. One option is tracking conversation context across turns. Another, with a vision‑capable variant such as Phi‑3‑vision, is incorporating images for multimodal interactions.
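Context tracking is the natural first extension. Phi‑3‑mini‑4k‑instruct is an instruction‑tuned chat model. Transformers tokenizers expose `apply_chat_template` to render a list of role/content messages in the model's expected format. The sketch below uses a hypothetical `ChatHistory` class that keeps only the most recent turns, to help the prompt stay inside the 4k‑token context window. `build_inputs` is likewise an illustrative helper.

```python
from typing import Dict, List

Message = Dict[str, str]


class ChatHistory:
    """Stores role/content messages and exposes a window of recent turns.

    max_turns is a hypothetical knob: one turn is a user message plus the
    assistant reply. Limiting turns is a rough proxy for limiting tokens.
    """

    def __init__(self, max_turns: int = 5) -> None:
        self.max_turns = max_turns
        self.messages: List[Message] = []

    def add(self, role: str, content: str) -> None:
        self.messages.append({"role": role, "content": content})

    def window(self) -> List[Message]:
        # Up to max_turns complete turns plus a pending user message.
        recent = self.messages[-(2 * self.max_turns + 1):]
        # Never start the window on an assistant reply.
        while recent and recent[0]["role"] != "user":
            recent = recent[1:]
        return recent


def build_inputs(tokenizer, history: ChatHistory):
    """Render the recent history with the tokenizer's chat template.

    add_generation_prompt=True appends the assistant prefix so the model
    answers as the assistant; return_tensors="pt" requests PyTorch input IDs.
    """
    return tokenizer.apply_chat_template(
        history.window(), add_generation_prompt=True, return_tensors="pt"
    )
```

In the chat loop, you would call `history.add("user", user_input)` and pass `build_inputs(tokenizer, history).to(model.device)` to `model.generate`. Decode only the tokens after position `input_ids.shape[1]`, then store the reply with `history.add("assistant", reply)`. With default settings in recent Transformers 4.x releases, `apply_chat_template` returns a tensor of input IDs. Check the return type in your version.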

4. Final Thoughts

Microsoft Phi is a significant step toward democratizing access to capable AI on modest hardware. Its lightweight design and flexibility make it a strong fit for developers who want to build customized, efficient AI agents without a massive computational budget. Whether you’re prototyping a chatbot, experimenting with multimodal tasks, or integrating Phi into an enterprise solution, the model family offers a solid foundation with clear documentation and community support. As AI continues to evolve, small models like Phi can help you keep your applications agile and innovative.

Happy coding and exploring!

Cohorte Team

March 5, 2025