Enhancing Knowledge Extraction with LlamaIndex: A Comprehensive Step-by-Step Guide

Extracting knowledge from unstructured data is crucial for organizations that want to use their information effectively. LlamaIndex provides tools for this, including the construction of knowledge graphs that represent entities and the relationships between them. This guide walks step by step through using LlamaIndex for knowledge extraction, with code snippets and practical notes.

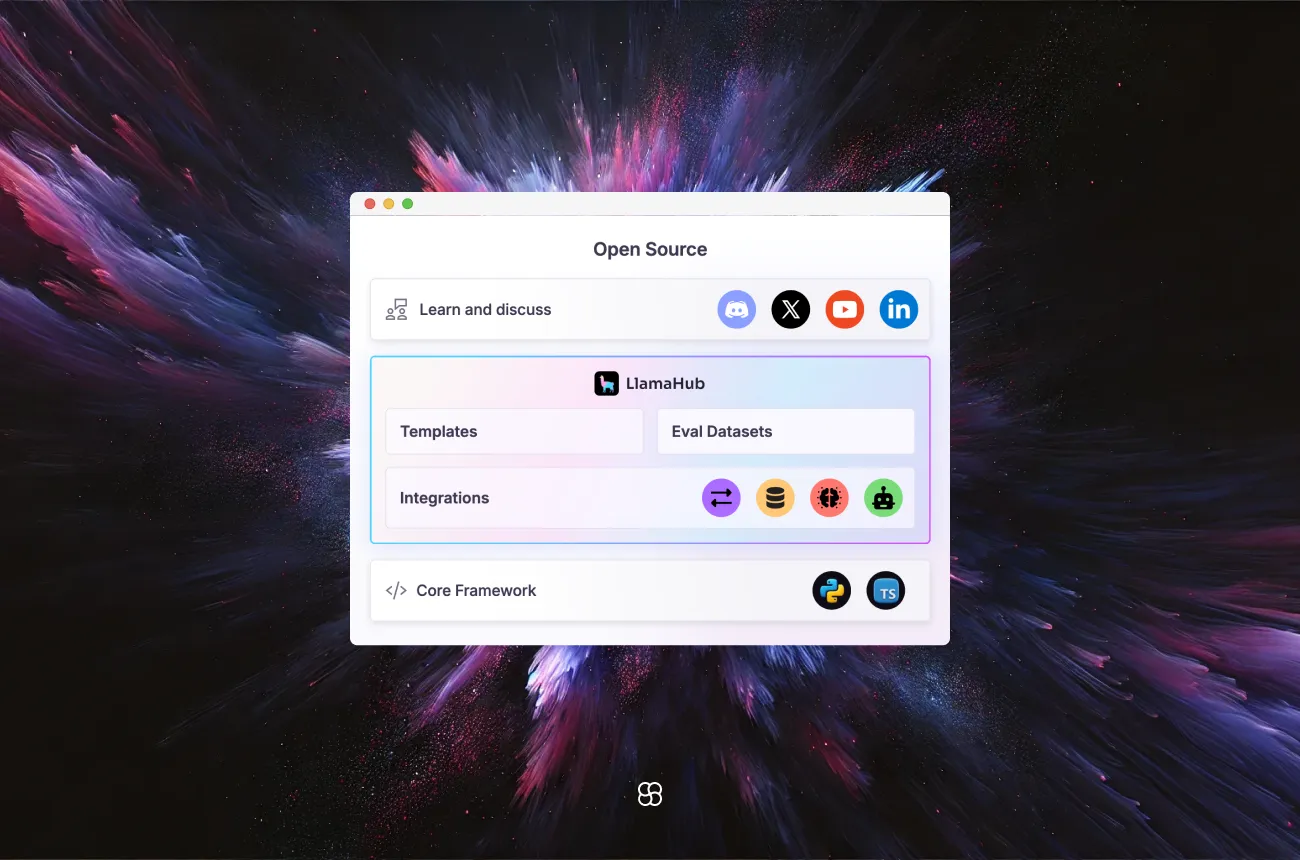

Overview of LlamaIndex

LlamaIndex is an open-source data orchestration framework designed to assist in building large language model (LLM) applications. It simplifies the ingestion, indexing, and querying of data, thereby enhancing the capabilities of LLMs in handling complex information retrieval tasks.

Benefits

- Automated Knowledge Graph Construction: LlamaIndex facilitates the automated creation of knowledge graphs from unstructured text, identifying entities and their relationships to organize information into a structured format.

- Schema-Guided Extraction: It allows users to define schemas specifying permissible entity types and relationships, ensuring that the extracted knowledge adheres to predefined structures, thereby enhancing relevance and accuracy.

- Natural Language Querying: LlamaIndex enables intuitive querying of the knowledge graph using natural language, making it accessible even to non-technical users.
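To make the idea of a knowledge graph concrete, here is a minimal sketch in plain Python of the kind of (subject, relation, object) triplets such a graph holds. The names `Triplet` and `neighbors` and the sample facts are illustrative assumptions, not part of the LlamaIndex API.

```python
from collections import defaultdict
from typing import NamedTuple


class Triplet(NamedTuple):
    """One edge of a knowledge graph: subject --relation--> object."""
    subject: str
    relation: str
    obj: str


# Hypothetical facts that an extractor might pull from a sentence such as
# "Alice works at Acme, which is located in Paris."
triplets = [
    Triplet("Alice", "WORKS_AT", "Acme"),
    Triplet("Acme", "LOCATED_IN", "Paris"),
]


def neighbors(facts):
    """Group outgoing edges by subject so each entity can be looked up."""
    graph = defaultdict(list)
    for fact in facts:
        graph[fact.subject].append((fact.relation, fact.obj))
    return dict(graph)


graph = neighbors(triplets)
print(graph["Alice"])
```

Storing facts as typed edges like this is what lets a later query follow a chain such as person, then organization, then location.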

Getting Started

Installation and Setup

1. Install LlamaIndex:

Ensure you have Python installed. Then, install LlamaIndex using pip:

pip install llama-index

2. Set Up OpenAI API Key:

Obtain an API key from OpenAI and set it as an environment variable:

export OPENAI_API_KEY='your_openai_api_key'

First Steps
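Before running the steps that follow, it can help to confirm that the key is actually visible to Python, since a missing variable otherwise only surfaces when the first LLM call fails. The helper below is a small sketch using only the standard library; `has_openai_key` is a hypothetical name introduced for illustration.

```python
import os


def has_openai_key(env=None):
    """Return True if OPENAI_API_KEY is set to a non-empty value."""
    env = os.environ if env is None else env
    return bool(env.get("OPENAI_API_KEY", "").strip())


if not has_openai_key():
    print("OPENAI_API_KEY is not set; export it before building an index.")
```

The optional `env` argument exists only so the check can be exercised against a plain dictionary instead of the real environment.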

1. Import Libraries:

from llama_index.core import SimpleDirectoryReader, KnowledgeGraphIndex

2. Load Documents:

Assuming your documents are stored in a directory named 'data', load them as follows:

documents = SimpleDirectoryReader('data').load_data()

3. Initialize the Knowledge Graph Index:

index = KnowledgeGraphIndex.from_documents(documents)

First Run

After setting up, you can start extracting knowledge by querying the index:

query_engine = index.as_query_engine()
response = query_engine.query("What are the key entities and their relationships in the documents?")
print(response)

This prints a natural-language answer that the LLM synthesizes from the entities and relationships extracted from the input documents.

Step-by-Step Example: Building a Simple Knowledge Extraction Agent

Let's build a simple agent that extracts structured data from unstructured text using LlamaIndex.

1. Define the Schema:

Specify the entity types and relationships you are interested in:

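from typing import Literal

from llama_index.core import Settings
from llama_index.core.indices.property_graph import SchemaLLMPathExtractor

# Allowed entity and relation labels, written as Literal types as in the LlamaIndex documentation
entities = Literal['PERSON', 'ORGANIZATION', 'LOCATION']
relations = Literal['WORKS_AT', 'LOCATED_IN']

# For each entity type, the relations allowed for it
schema = {
    'PERSON': ['WORKS_AT'],
    'ORGANIZATION': ['LOCATED_IN'],
    'LOCATION': []
}

kg_extractor = SchemaLLMPathExtractor(
    llm=Settings.llm,  # the default LLM (OpenAI, using the key set earlier)
    possible_entities=entities,
    possible_relations=relations,
    kg_validation_schema=schema,
    strict=True  # discard triplets that fall outside the schema
)

2. Build the Property Graph Index: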

Use the defined schema to construct the knowledge graph:

from llama_index.core import PropertyGraphIndex

index = PropertyGraphIndex.from_documents(documents, kg_extractors=[kg_extractor])

3. Query the Knowledge Graph:

After building the index, you can query it to extract information:

query_engine = index.as_query_engine()
response = query_engine.query("List all persons and their associated organizations.")
print(response)

This prints a natural-language answer listing the persons and the organizations they are associated with, drawn from the graph built from the input documents.
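The effect of the validation schema and strict=True from step 1 can be pictured with a small plain-Python filter. This is only a conceptual sketch: `TypedTriplet` and `is_allowed` are hypothetical names, the sample triplets are made up, and LlamaIndex's own validation logic may differ in detail.

```python
from typing import NamedTuple

# Same schema shape as in step 1: entity type -> relations it may start.
schema = {
    "PERSON": ["WORKS_AT"],
    "ORGANIZATION": ["LOCATED_IN"],
    "LOCATION": [],
}


class TypedTriplet(NamedTuple):
    subject: str
    subject_type: str
    relation: str
    obj: str
    obj_type: str


def is_allowed(t, schema):
    """Keep a triplet only if both entity types are known and the
    relation is listed for the subject's type."""
    return (
        t.subject_type in schema
        and t.obj_type in schema
        and t.relation in schema[t.subject_type]
    )


candidates = [
    TypedTriplet("Alice", "PERSON", "WORKS_AT", "Acme", "ORGANIZATION"),
    TypedTriplet("Acme", "ORGANIZATION", "LOCATED_IN", "Paris", "LOCATION"),
    # Rejected: a LOCATION may not start a WORKS_AT relation.
    TypedTriplet("Paris", "LOCATION", "WORKS_AT", "Alice", "PERSON"),
    # Rejected: OWNS and PRODUCT are not part of the schema.
    TypedTriplet("Alice", "PERSON", "OWNS", "Bike", "PRODUCT"),
]

kept = [t for t in candidates if is_allowed(t, schema)]
for t in kept:
    print(f"{t.subject} -[{t.relation}]-> {t.obj}")
```

Filtering at extraction time keeps off-schema edges out of the graph, so later queries only ever see the entity and relation types you declared.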

Advanced Applications

Beyond basic knowledge extraction, LlamaIndex offers advanced capabilities:

- Integration with Full-Stack Applications: LlamaIndex can be integrated into full-stack web applications, serving as a backend for data ingestion and retrieval. It can be used in a backend server (such as Flask), packaged into a Docker container, and/or directly used in a framework such as Streamlit.

- Serverless Deployment: LlamaIndex supports building serverless Retrieval-Augmented Generation (RAG) applications, allowing for scalable and efficient deployment. This approach enables applications to handle large volumes of data and user queries without the need for managing server infrastructure.

- Structured Data Extraction: LlamaIndex can extract structured data from unstructured sources, such as identifying names, dates, addresses, and figures, and returning them in a consistent format. This capability is particularly useful for applications requiring data normalization and standardization.
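As a sketch of the backend-integration idea, the function below wraps any object that exposes a `query()` method (such as the query engine built earlier) and returns a JSON-serializable payload that a Flask route or Streamlit callback could pass on. `handle_question` and `EchoEngine` are hypothetical names; the stub stands in for a real engine so that the example runs without an API key.

```python
import json


def handle_question(query_engine, question):
    """Validate the question, run it through the engine, and return a dict."""
    question = (question or "").strip()
    if not question:
        return {"ok": False, "error": "question must not be empty"}
    response = query_engine.query(question)
    # str() mirrors how the article's examples render a response via print().
    return {"ok": True, "question": question, "answer": str(response)}


class EchoEngine:
    """Stand-in for a real query engine; only used for this demo."""

    def query(self, question):
        return f"(stub answer for: {question})"


payload = handle_question(EchoEngine(), "  Who works at Acme?  ")
print(json.dumps(payload, indent=2))
```

Keeping the handler independent of any web framework means the same function can sit behind a Flask endpoint, a serverless function, or a Streamlit widget.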

Final Thoughts

LlamaIndex offers a powerful framework for knowledge extraction from unstructured data. By building knowledge graphs and supporting natural language querying, it helps organizations turn raw text into actionable insights. Defining a schema and automating the extraction step keeps the extracted knowledge within the entity and relation types you care about, which improves its relevance and makes it a useful tool for data-driven decision-making.

For more detailed information and advanced use cases, refer to the LlamaIndex Documentation. Additionally, explore the LlamaIndex GitHub Repository for practical examples and further guidance.

Cohorte Team

January 20, 2025